methodology

It’s again the season for nine lessons, I suppose. So, on the occasion of Christmas Eve, let me rephrase the nine lessons I once gave (with my tongue firmly lodged in my cheek) to those who want to make a pseudo-scientific career in so-called alternative medicine (SCAM) research.

- Throw yourself into qualitative research. For instance, focus groups are a safe bet. They are not difficult to do: you gather 5-10 people, let them express their opinions, record them, extract from the diversity of views what you recognize as your own opinion and call it a ‘common theme’, write the whole thing up, and – BINGO! – you have a publication. The beauty of this approach is manifold:

- you can repeat this exercise ad nauseam until your publication list is of respectable length;

- there are plenty of SCAM journals that will publish your articles;

- you can manipulate your findings at will;

- you will never produce a paper that displeases the likes of King Charles;

- you might even increase your chances of obtaining funding for future research.

- Conduct surveys. They are very popular and highly respected/publishable projects in SCAM. Do not get deterred by the fact that thousands of similar investigations are already available. If, for instance, there already is one describing the SCAM usage by leg-amputated policemen in North Devon, you can conduct a survey of leg-amputated policemen in North Devon with a medical history of diabetes. As long as you conclude that your participants used a lot of SCAMs, were very satisfied with them, did not experience any adverse effects, thought they were value for money, and would recommend them to their neighbour, you have secured another publication in a SCAM journal.

- In case this does not appeal to you, how about taking a sociological, anthropological or psychological approach? How about studying, for example, the differences in worldviews, the different belief systems, the different ways of knowing, the different concepts about illness, the different expectations, the unique spiritual dimensions, the amazing views on holism – all in different cultures, settings or countries? Invariably, you must, of course, conclude that one truth is at least as good as the next. This will make you popular with all the post-modernists who use SCAM as a playground for enlarging their publication lists. This approach also has the advantage of allowing you to travel extensively and generally have a good time.

- If, eventually, your boss demands that you start doing what (in his narrow mind) constitutes ‘real science’, do not despair! There are plenty of possibilities to remain true to your pseudo-scientific principles. Study the safety of your favourite SCAM with a survey of its users. You simply evaluate their experiences and opinions regarding adverse effects. But be careful, you are on thin ice here; you don’t want to upset anyone by generating alarming findings. Make sure your sample is small enough for a false negative result, and that all participants are well-pleased with their SCAM. This might be merely a question of selecting your patients wisely. The main thing is that your conclusions do not reveal any risks.

- If your boss insists you tackle the daunting issue of SCAM’s efficacy, you must find patients who happened to have recovered spectacularly well from a life-threatening disease after receiving your favourite form of SCAM. Once you have identified such a person, you detail her experience and publish this as a ‘case report’. It requires a little skill to brush over the fact that the patient also had lots of conventional treatments, or that her diagnosis was never properly verified. As a pseudo-scientist, you will have to learn how to discreetly make such details vanish so that, in the final paper, they are no longer recognisable.

- Your boss might eventually point out that case reports are not really very conclusive. The antidote to this argument is simple: you do a large case series along the same lines. Here you can even show off your excellent statistical skills by calculating the statistical significance of the difference between the severity of the condition before the treatment and the one after it. As long as this reveals marked improvements, ignores all the many other factors involved in the outcome and concludes that these changes are the result of the treatment, all should be tickety-boo.

- Your boss might one day insist you conduct what he narrow-mindedly calls a ‘proper’ study; in other words, you might be forced to bite the bullet and learn how to do an RCT. As your particular SCAM is not really effective, this could lead to serious embarrassment in the form of a negative result, something that must be avoided at all costs. I, therefore, recommend you spend a few months with a research group that has a proven track record in doing RCTs of utterly useless treatments without ever failing to conclude that they are highly effective. In other words, join a member of my ALTERNATIVE MEDICINE HALL OF FAME. They will teach you how to incorporate all the right design features into your study without the slightest risk of generating a negative result. A particularly popular solution is to conduct a ‘pragmatic’ trial that never fails to produce cheerfully positive findings.

- But even the most cunningly designed study of your SCAM might one day deliver a negative result. In such a case, I recommend taking your data and running as many different statistical tests as you can find; chances are that one of them will produce something vaguely positive. If even this method fails (and it hardly ever does), you can always focus your paper on the fact that, in your study, not a single patient died. Who would be able to dispute that this is a positive outcome?

- Now that you have grown into an experienced pseudo-scientist who has published several misleading papers, you may want to publish irrefutable evidence of your SCAM. For this purpose, run the same RCT over again, and again, and again. Eventually, you want a meta-analysis of all RCTs ever published (see examples here and here). As you are the only person who conducted studies on the SCAM in question, this should be quite easy: you pool the data of all your dodgy trials and, Bob’s your uncle: a nice little summary of the totality of the data that shows beyond doubt that your SCAM works and is safe.
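The advice to run "as many different statistical tests as you can find" works for a simple arithmetical reason. As a minimal sketch, assuming the tests are independent, that the SCAM has no real effect, and that each test uses the conventional 5% significance threshold (the function name is purely illustrative), the chance of at least one spurious 'positive' grows quickly with the number of tests:

```python
def familywise_false_positive_rate(n_tests: int, alpha: float = 0.05) -> float:
    """Chance that at least one of n independent tests is 'significant' by luck alone.

    Assumes no true effect exists and that the tests are independent;
    real analyses of the same data are correlated, so this is only a rough guide.
    """
    return 1.0 - (1.0 - alpha) ** n_tests


for k in (1, 5, 10, 20):
    rate = familywise_false_positive_rate(k)
    print(f"{k:>2} tests -> {rate:.0%} chance of at least one spurious 'positive'")
# 1 test gives 5%, 5 tests 23%, 10 tests 40%, 20 tests 64%
```

This is why a single pre-specified primary analysis, or a correction for multiple comparisons, matters.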

Didier Raoult, the French scientist who became well-known for his controversial stance on hydroxychloroquine for treating COVID-19, has featured on this blog before (see here, here, and here). Less well-known is the fact that he has attracted controversy before. In 2006, Raoult and 4 co-authors were banned for one year from publishing in the journals of the American Society for Microbiology (ASM), after a reviewer for Infection and Immunity discovered that four figures from the revised manuscript of a paper about a mouse model for typhus were identical to figures from the originally submitted manuscript, even though they were supposed to represent a different experiment. In response, Raoult “resigned from the editorial board of two other ASM journals, canceled his membership in the American Academy of Microbiology, ASM’s honorific leadership group, and banned his lab from submitting to ASM journals”. In response to Science covering the story in 2012, he stated that, “I did not manage the paper and did not even check the last version”. The paper was subsequently published in a different journal.

Now, the publisher PLOS is marking nearly 50 articles by Didier Raoult with expressions of concern while it investigates potential research ethics violations in the work. PLOS has been looking into more than 100 articles by Raoult and determined that the issues in 49 of the papers, including reuse of ethics approval reference numbers, warrant expressions of concern while the publisher continues its inquiry.

In August of 2021, Elisabeth Bik wrote on her blog about a series of 17 articles from IHU-Méditerranée Infection that described different studies involving homeless people in Marseille over a decade, but all listed the same institutional ethics approval number. Bik and other commenters on PubPeer have identified ethical concerns in many other papers, including others in large groups of papers with the same ethical approval numbers. Subsequently, Bik has received harassment and legal threats from Raoult.

David Knutson, senior manager of communications for PLOS, sent ‘Retraction Watch’ this statement:

PLOS is issuing interim Expressions of Concerns for 49 articles that are linked to researchers affiliated with IHU-Méditerranée Infection (Marseille, France) and/or the Aix-Marseille University, as part of an ongoing case that involves more than 100 articles in total. Many of the papers in this case include controversial scientist Didier Raoult as a co-author.

Several whistleblowers raised concerns about articles from this institute, including that several ethics approval reference numbers have been reused in many articles. Our investigation, which has been ongoing for more than a year, confirmed ethics approval reuse and also uncovered other issues including:

- highly prolific authorship (a rate that would equate to nearly 1 article every 3 days for one or more individuals), which calls into question whether PLOS’ authorship criteria have been met

- undeclared COIs with pharmaceutical companies

To date, PLOS has completed a detailed initial assessment of 108 articles in total and concluded that 49 warrant an interim Expression of Concern due to the nature of the concerns identified. We’ll be following up with the authors of all articles of concern in accordance with COPE guidance and PLOS policies, but we anticipate it will require at least another year to complete this work.

Raoult is a coauthor on 48 of the 49 papers in question. This summer, Raoult retired as director of IHU-Méditerranée Infection, the hospital and research institution in Marseille that he had overseen since 2011, following an inspection by the French National Agency for the Safety of Medicines and Health Products (ANSM) that found “serious shortcomings and non-compliances with the regulations for research involving the human person” at IHU-Méditerranée Infection and another Marseille hospital. ANSM imposed sanctions on IHU-Méditerranée Infection, including suspending a research study and placing any new research involving people under supervision, and called for a criminal investigation. Other regulators have also urged Marseille’s prosecutor to investigate “serious malfunctions” at the research institution.

Pierre-Edouard Fournier, the new director of IHU-Méditerranée Infection, issued a statement on September 7th that said he had “ensured that all clinical trials in progress relating to research involving the human person (RIPH) were suspended pending the regularization of the situation.” Also in September, the American Society for Microbiology placed expressions of concern on 6 of Raoult’s papers in two of its journals, citing “a ‘scientific misconduct investigation’ by the University of Aix Marseille,” where the researcher also has an affiliation.

___________________________

Christian Lehman predicted on my blog that “If Covid19 settles in the long-term, he [Raoult] will not be able to escape a minutely detailed autopsy of his statements and his actions. And the result will be devastating.” It seems he was correct.

Hardly a day goes by that I am not asked by someone – a friend, colleague, practitioner, journalist, etc. – about the evidence for this or that so-called alternative medicine (SCAM). I always try my best to give a truthful answer, and often it amounts to something like this: TO THE BEST OF MY KNOWLEDGE, THERE IS NO GOOD EVIDENCE TO SHOW THAT IT WORKS.

The reactions to this news vary, e.g.:

- Some ignore it and seem to think ‘what does he know?’.

- Some thank me and make their decisions accordingly.

- Some feel they had better do a fact-check.

The last reaction is perhaps the most interesting because often the person, clearly an enthusiast of that particular SCAM, later comes back to me and triumphantly shows me evidence that contradicts my statement.

This means I now must have a look at what evidence he/she has found.

It can fall into several categories:

- Opinion articles published by proponents of the SCAM in question.

- Papers that are not truly relevant to the SCAM.

- Research that provides data about the SCAM that does not relate to its effectiveness, e.g. surveys, or qualitative studies.

- Studies of the SCAM in question.

It is usually easy to explain why the first three categories are irrelevant. Yet, the actual studies can be a problem. Remember, I told that person that no good evidence exists, and now he (let’s assume I am dealing with a man) proudly shows me a study suggesting the opposite. There might be the following explanations:

- I did not know this high-quality study (e.g. because it is new) and my dismissive statement was thus questionable or wrong.

- The study draws a positive conclusion about the SCAM but this conclusion is not justified.

In the first instance, do I need to change my mind and apologize for my wrong statement? Perhaps! But I also need to explain that, even with a rigorous study, we really ought to have one (better more than one) independent replication before we start changing our clinical routine.

In the second instance, I need to explain why the conclusion is not justified. The realm of SCAM is plagued by studies with misleading conclusions (as regular readers of this blog know only too well). Therefore, this situation arises with some regularity. There are numerous reasons why a study can generate unreliable findings. Some of them are easy to understand; others might be more difficult for non-scientists to comprehend. This means that the discussions with the man who proudly brought the ‘evidence’ to my attention can be tedious.

Often he feels that I am unfair to his favorite SCAM. He might argue that:

- I am biased;

- I lack an open mind;

- I am not qualified;

- I am moving the goalposts;

- I am applying double standards because much of the research into conventional medicine is also not flawless.

In such cases, we are likely to eventually end our discussions by agreeing to disagree. He will be convinced of his point of view and I will be convinced of mine. Essentially, we are more or less where we started, and the whole palaver was for nothing.

… a bit like this post?

I hope not!

What I have been trying to demonstrate is that:

- SCAM enthusiasts are often difficult, sometimes impossible to convince;

- research is not always easy to understand and requires a minimum of education and know-how.

Is acupuncture more than a theatrical placebo? Acupuncture fans are convinced that the answer to this question is YES. Perhaps this paper will make them think again.

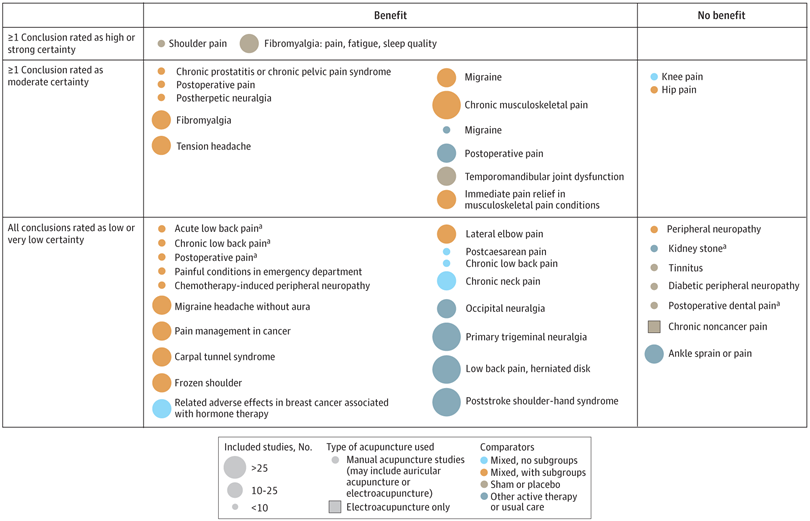

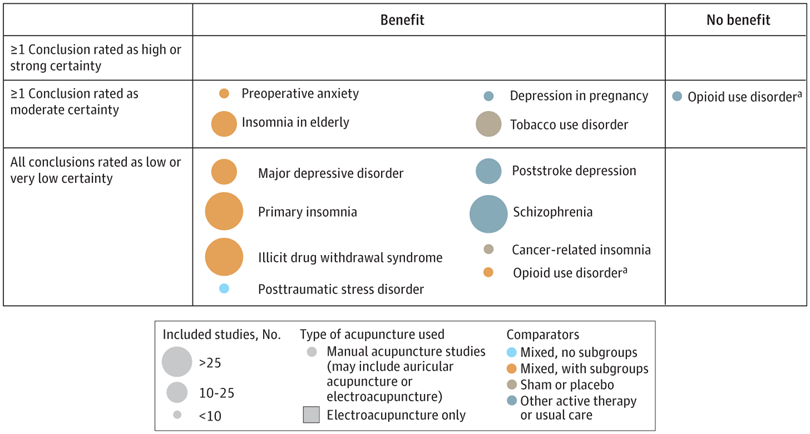

A new analysis mapped the systematic reviews, conclusions, and certainty or quality of evidence for outcomes of acupuncture as a treatment for adult health conditions. The authors ran a computerized search of PubMed and 4 other databases from 2013 to 2021. They included systematic reviews of acupuncture (whole body, auricular, or electroacupuncture) for adult health conditions that formally rated the certainty, quality, or strength of evidence for conclusions. Studies of acupressure, fire acupuncture, laser acupuncture, or traditional Chinese medicine without mention of acupuncture were excluded. For each review, they extracted the health condition, number of included studies, type of acupuncture, type of comparison group, conclusions, and certainty or quality of evidence. The main outcomes were reviews with at least 1 conclusion rated as high-certainty evidence, reviews with at least 1 conclusion rated as moderate-certainty evidence, and reviews with all conclusions rated as low- or very low-certainty evidence, together with a full list of all conclusions and their certainty of evidence.

A total of 434 systematic reviews of acupuncture for adult health conditions were found; of these, 127 reviews used a formal method to rate the certainty or quality of evidence of their conclusions, and 82 reviews were mapped, covering 56 health conditions. Across these, there were 4 conclusions that were rated as high-certainty evidence and 31 conclusions that were rated as moderate-certainty evidence. All remaining conclusions (>60) were rated as low- or very low-certainty evidence. Approximately 10% of conclusions rated as high or moderate-certainty were that acupuncture was no better than the comparator treatment, and approximately 75% of high- or moderate-certainty evidence conclusions were about acupuncture compared with a sham or no treatment.

Three evidence maps (pain, mental conditions, and other conditions) are shown below

The authors concluded that despite a vast number of randomized trials, systematic reviews of acupuncture for adult health conditions have rated only a minority of conclusions as high- or moderate-certainty evidence, and most of these were about comparisons with sham treatment or had conclusions of no benefit of acupuncture. Conclusions with moderate or high-certainty evidence that acupuncture is superior to other active therapies were rare.

These findings are sobering for those who had hoped that acupuncture might be effective for a range of conditions. Despite the fact that, during recent years, there have been numerous systematic reviews, the evidence remains negative or flimsy. As 34 reviews originate from China, and as we know about the notorious unreliability of Chinese acupuncture research, this overall result is probably even more negative than the authors make it out to be.

Considering such findings, some people (including the authors of this analysis) feel that we now need more and better acupuncture trials. Yet I wonder whether this is the right approach. Would it not be better to call it a day, concede that acupuncture generates no or only relatively minor effects, and focus our efforts on more promising subjects?

Today, you cannot read a newspaper or listen to the radio without learning that there has been a significant, sensational, momentous, unprecedented, etc. breakthrough in the treatment of Alzheimer’s disease. The reason for all this excitement (or is it hype?) is this study just out in the NEJM:

BACKGROUND

The accumulation of soluble and insoluble aggregated amyloid-beta (Aβ) may initiate or potentiate pathologic processes in Alzheimer’s disease. Lecanemab, a humanized IgG1 monoclonal antibody that binds with high affinity to Aβ soluble protofibrils, is being tested in persons with early Alzheimer’s disease.

METHODS

We conducted an 18-month, multicenter, double-blind, phase 3 trial involving persons 50 to 90 years of age with early Alzheimer’s disease (mild cognitive impairment or mild dementia due to Alzheimer’s disease) with evidence of amyloid on positron-emission tomography (PET) or by cerebrospinal fluid testing. Participants were randomly assigned in a 1:1 ratio to receive intravenous lecanemab (10 mg per kilogram of body weight every 2 weeks) or placebo. The primary end point was the change from baseline at 18 months in the score on the Clinical Dementia Rating–Sum of Boxes (CDR-SB; range, 0 to 18, with higher scores indicating greater impairment). Key secondary end points were the change in amyloid burden on PET, the score on the 14-item cognitive subscale of the Alzheimer’s Disease Assessment Scale (ADAS-cog14; range, 0 to 90; higher scores indicate greater impairment), the Alzheimer’s Disease Composite Score (ADCOMS; range, 0 to 1.97; higher scores indicate greater impairment), and the score on the Alzheimer’s Disease Cooperative Study–Activities of Daily Living Scale for Mild Cognitive Impairment (ADCS-MCI-ADL; range, 0 to 53; lower scores indicate greater impairment).

RESULTS

A total of 1795 participants were enrolled, with 898 assigned to receive lecanemab and 897 to receive placebo. The mean CDR-SB score at baseline was approximately 3.2 in both groups. The adjusted least-squares mean change from baseline at 18 months was 1.21 with lecanemab and 1.66 with placebo (difference, −0.45; 95% confidence interval [CI], −0.67 to −0.23; P<0.001). In a substudy involving 698 participants, there were greater reductions in brain amyloid burden with lecanemab than with placebo (difference, −59.1 centiloids; 95% CI, −62.6 to −55.6). Other mean differences between the two groups in the change from baseline favoring lecanemab were as follows: for the ADAS-cog14 score, −1.44 (95% CI, −2.27 to −0.61; P<0.001); for the ADCOMS, −0.050 (95% CI, −0.074 to −0.027; P<0.001); and for the ADCS-MCI-ADL score, 2.0 (95% CI, 1.2 to 2.8; P<0.001). Lecanemab resulted in infusion-related reactions in 26.4% of the participants and amyloid-related imaging abnormalities with edema or effusions in 12.6%.

CONCLUSIONS

Lecanemab reduced markers of amyloid in early Alzheimer’s disease and resulted in moderately less decline on measures of cognition and function than placebo at 18 months but was associated with adverse events. Longer trials are warranted to determine the efficacy and safety of lecanemab in early Alzheimer’s disease. (Funded by Eisai and Biogen; Clarity AD ClinicalTrials.gov number, NCT03887455.)
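To put the headline figures of this abstract into proportion, the primary result can be re-expressed with a few lines of arithmetic. This is only a sketch using the numbers quoted above; the standard error is my own back-calculation from the reported confidence interval under a normal approximation, not a figure from the paper.

```python
# Figures quoted in the abstract (CDR-SB change from baseline at 18 months).
change_lecanemab = 1.21
change_placebo = 1.66
ci_low, ci_high = -0.67, -0.23  # reported 95% CI of the difference

# Absolute difference on the 0-18 CDR-SB scale (negative = less decline).
difference = change_lecanemab - change_placebo

# Share of the placebo group's decline that was avoided.
relative_slowing = -difference / change_placebo

# Assumption: normal approximation, so the CI spans about 2 * 1.96 standard errors.
approx_standard_error = (ci_high - ci_low) / (2 * 1.96)

print(f"absolute difference: {difference:.2f} points on an 18-point scale")
print(f"relative slowing:    {relative_slowing:.0%}")
print(f"approx. std. error:  {approx_standard_error:.3f}")
```

In other words, the decline was slowed by roughly a quarter, which amounts to less than half a point on an 18-point scale.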

It’s a good study, and I (like everyone else) hope that it will mean tangible progress in the management of that devastating disease. Most media outlets are announcing the news with the claim that it is the FIRST TIME that any treatment has been shown to delay the cognitive decline of Alzheimer’s disease patients.

But is this true?

I think not!

There have been several studies showing that the herbal remedy GINKGO BILOBA slows the Alzheimer-related decline. Here is the latest systematic review of the subject:

Background: Ginkgo biloba is a natural medicine used for cognitive impairment and Alzheimer’s disease. The objective of this review is to explore the effectiveness and safety of Ginkgo biloba in treating mild cognitive impairment and Alzheimer’s disease.

Methods: Electronic search was conducted from PubMed, Cochrane Library, and four major Chinese databases from their inception up to 1st December, 2014 for randomized clinical trials on Ginkgo biloba in treating mild cognitive impairment or Alzheimer’s disease. Meta-analyses were performed by RevMan 5.2 software.

Results: 21 trials with 2608 patients met the inclusion criteria. The general methodological quality of included trials was moderate to poor. Compared with conventional medicine alone, Ginkgo biloba in combination with conventional medicine was superior in improving Mini-Mental State Examination (MMSE) scores at 24 weeks for patients with Alzheimer’s disease (MD 2.39, 95% CI 1.28 to 3.50, P<0.0001) and mild cognitive impairment (MD 1.90, 95% CI 1.41 to 2.39, P<0.00001), and Activity of Daily Living (ADL) scores at 24 weeks for Alzheimer’s disease (MD -3.72, 95% CI -5.68 to -1.76, P=0.0002). When compared with placebo or conventional medicine in individual trials, Ginkgo biloba demonstrated similar but inconsistent findings. Adverse events were mild.

Conclusion: Ginkgo biloba is potentially beneficial for the improvement of cognitive function, activities of daily living, and global clinical assessment in patients with mild cognitive impairment or Alzheimer’s disease. However, due to limited sample size, inconsistent findings and methodological quality of included trials, more research is warranted to confirm the effectiveness and safety of ginkgo biloba in treating mild cognitive impairment and Alzheimer’s disease.

I know, the science is not nearly as good as that of the NEJM trial. I also know that the trial data for ginkgo biloba are not uniformly positive. And I know that, after several studies showed good results, later trials tended not to confirm them.

But this is what very often happens in clinical research: studies are initially promising, only to be disappointing as more studies emerge. I sincerely hope that this will not happen with the new drug ‘Lecanemab’ and that today’s excitement will not turn out to be hype.

This double-blind, randomized study assessed the effectiveness of physiotherapy instrument mobilization (PIM) in patients with low back pain (LBP) and compared it with the effectiveness of manual mobilization.

Thirty-two participants with LBP were randomly assigned to one of two groups:

- The PIM group received lumbar mobilization using an activator instrument, stabilization exercises, and education.

- The manual group received lumbar mobilization using a pisiform grip, stabilization exercises, and education.

Both groups had 4 treatment sessions over 2-3 weeks. The following outcomes were measured before the intervention, and after the first and fourth sessions:

- Numeric Pain Rating Scale (NPRS),

- Oswestry Disability Index (ODI) scale,

- Pressure pain threshold (PPT),

- lumbar spine range of motion (ROM),

- lumbar multifidus muscle activation.

There were no differences between the PIM and manual groups in any outcome measures. However, over the period of study, there were improvements in both groups in NPRS (PIM: 3.23, Manual: 3.64 points), ODI (PIM: 17.34%, Manual: 14.23%), PPT (PIM: ⩽ 1.25, Manual: ⩽ 0.85 kg/cm²), lumbar spine ROM (PIM: ⩽ 9.49°, Manual: ⩽ 0.88°), and/or lumbar multifidus muscle activation (percentage thickness change: PIM: ⩽ 4.71, Manual: ⩽ 4.74 cm; activation ratio: PIM: ⩽ 1.17, Manual: ⩽ 1.15 cm).

The authors concluded that both methods of lumbar spine mobilization demonstrated comparable improvements in pain and disability in patients with LBP, with neither method exhibiting superiority over the other.

If this conclusion is meant to tell us that both treatments were equally effective, I beg to differ. The improvements documented here are consistent with improvements caused by the natural history of the condition, regression towards the mean, and placebo effects. The data do not prove that they are due to the treatments. On the contrary, they seem to imply that patients get better no matter what therapy is used. Thus, I feel that the results are entirely in keeping with the hypothesis that spinal mobilization is a placebo treatment.

So, allow me to re-phrase the authors’ conclusion as follows:

Lumbar mobilizations do not seem to have specific therapeutic effects and might therefore be considered to be ineffective for LBP.
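Regression towards the mean, one of the explanations mentioned above, is easy to demonstrate. The following is a toy simulation, not a re-analysis of the trial: all numbers (the 0-10 pain scale, the entry threshold, the size of the day-to-day fluctuations) are my own illustrative assumptions, and for simplicity the simulated scores are not clipped to the scale. Patients are enrolled only when their pain is high on the screening day and are then measured again without receiving any effective treatment.

```python
import random
import statistics


def simulate_regression_to_mean(n_screened=10_000, entry_threshold=6.0, seed=1):
    """Return (mean pain at enrolment, mean pain at follow-up, number enrolled).

    No treatment effect is simulated; any 'improvement' is an artefact of
    enrolling people on a bad day.
    """
    rng = random.Random(seed)
    baseline, follow_up = [], []
    for _ in range(n_screened):
        usual_pain = rng.gauss(5.0, 1.0)                  # long-run average of this person
        at_screening = usual_pain + rng.gauss(0.0, 1.5)   # day-to-day fluctuation
        if at_screening < entry_threshold:
            continue                                      # not painful enough to be enrolled
        later = usual_pain + rng.gauss(0.0, 1.5)          # re-measured, nothing was done
        baseline.append(at_screening)
        follow_up.append(later)
    return statistics.mean(baseline), statistics.mean(follow_up), len(baseline)


before, after, enrolled = simulate_regression_to_mean()
print(f"{enrolled} enrolled; mean pain {before:.2f} at entry, {after:.2f} at follow-up")
```

The enrolled group 'improves' although nothing was done to them, which is why a before-and-after change in two treated groups cannot demonstrate that either treatment works.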

Acupuncture is emerging as a potential therapy for relieving pain, but the effectiveness of acupuncture for relieving low back and/or pelvic pain (LBPP) during pregnancy remains controversial. This meta-analysis aimed to investigate the effects of acupuncture on pain, functional status, and quality of life for women with LBPP during pregnancy.

The authors included all RCTs evaluating the effects of acupuncture on LBPP during pregnancy. Data extraction and study quality assessments were independently performed by three reviewers. The mean differences (MDs) with 95% CIs for pooled data were calculated. The primary outcomes were pain, functional status, and quality of life. The secondary outcomes were overall effects (a questionnaire at a post-treatment visit within a week after the last treatment to determine the number of people who received good or excellent help), analgesic consumption, Apgar scores >7 at 5 min, adverse events, gestational age at birth, induction of labor and mode of birth.

Ten studies, reporting on a total of 1040 women, were included. Overall, acupuncture

- relieved pain during pregnancy (MD=1.70, 95% CI: (0.95 to 2.45), p<0.00001, I2=90%),

- improved functional status (MD=12.44, 95% CI: (3.32 to 21.55), p=0.007, I2=94%),

- improved quality of life (MD=−8.89, 95% CI: (−11.90 to –5.88), p<0.00001, I2 = 57%).

There was a significant difference in overall effects (OR=0.13, 95% CI: (0.07 to 0.23), p<0.00001, I2 = 7%). However, there was no significant difference in analgesic consumption during the study period (OR=2.49, 95% CI: (0.08 to 80.25), p=0.61, I2=61%) and Apgar scores of newborns (OR=1.02, 95% CI: (0.37 to 2.83), p=0.97, I2 = 0%). Preterm birth from acupuncture during the study period was reported in two studies. Although preterm contractions were reported in two studies, all infants were in good health at birth. In terms of gestational age at birth, induction of labor, and mode of birth, only one study reported the gestational age at birth (mean gestation 40 weeks).

The authors concluded that acupuncture significantly improved pain, functional status and quality of life in women with LBPP during the pregnancy. Additionally, acupuncture had no observable severe adverse influences on the newborns. More large-scale and well-designed RCTs are still needed to further confirm these results.

What should we make of this paper?

In case you are in a hurry: NOT A LOT!

In case you need more, here are a few points:

- many trials were of poor quality;

- there was evidence of publication bias;

- there was considerable heterogeneity within the studies.

The most important issue is one studiously avoided in the paper: the treatment of the control groups. One has to dig deep into this paper to find that the control groups could be treated with “other treatments, no intervention, and placebo acupuncture”. Trials comparing acupuncture plus other treatments with other treatments alone were also considered to be eligible. In other words, the analyses included studies that compared acupuncture to no treatment at all as well as studies that followed the infamous ‘A+B versus B’ design. Seven studies used no intervention or standard of care in the control group, thus not controlling for placebo effects.
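Why an ‘A+B versus B’ comparison is bound to look positive can be sketched with a toy simulation. The effect sizes below are invented for illustration and have nothing to do with the data of the meta-analysis: the add-on treatment is given a specific effect of exactly zero, but, like any attention-rich intervention, it carries a non-specific (placebo) effect.

```python
import random
import statistics


def simulate_arm(n, nonspecific_effect, specific_effect, rng):
    """Mean pain reduction in one trial arm (hypothetical units)."""
    natural_course = 1.0  # assumed improvement everyone gets with time and usual care
    return statistics.mean(
        natural_course + nonspecific_effect + specific_effect + rng.gauss(0.0, 2.0)
        for _ in range(n)
    )


rng = random.Random(42)
n_per_arm = 2000

# The add-on has NO specific effect (0.0) but a non-specific effect of 1.5.
a_plus_b = simulate_arm(n_per_arm, nonspecific_effect=1.5, specific_effect=0.0, rng=rng)
b_only = simulate_arm(n_per_arm, nonspecific_effect=0.0, specific_effect=0.0, rng=rng)
sham_plus_b = simulate_arm(n_per_arm, nonspecific_effect=1.5, specific_effect=0.0, rng=rng)

ab_vs_b = a_plus_b - b_only             # looks like a clear treatment effect
ab_vs_sham_b = a_plus_b - sham_plus_b   # close to zero once placebo is controlled for

print(f"A+B versus B:      {ab_vs_b:+.2f}")
print(f"A+B versus sham+B: {ab_vs_sham_b:+.2f}")
```

Only the sham-controlled comparison isolates the specific effect; the unblinded add-on comparison reports the non-specific effect as if it were efficacy.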

Nobody can thus be in the slightest surprised that the overall result of the meta-analysis was positive – false positive, that is! And the worst is that this glaring limitation was not discussed as a feature that prevents firm conclusions.

Dishonest researchers?

Biased reviewers?

Incompetent editors?

Truly unbelievable!!!

In consideration of these points, let me rephrase the conclusions:

The well-documented placebo (and other non-specific) effects of acupuncture improved pain, functional status and quality of life in women with LBPP during the pregnancy. Unsurprisingly, acupuncture had no observable severe adverse influences on the newborns. More large-scale and well-designed RCTs are not needed to further confirm these results.

PS

I find it exasperating to see that more and more (formerly) reputable journals are misleading us with such rubbish!!!

This study aimed to evaluate the number of craniosacral therapy sessions that can be helpful to obtain a resolution of the symptoms of infantile colic and to observe if there are any differences in the evolution obtained by the groups that received a different number of craniosacral therapy sessions at 24 days of treatment, compared with the control group which did not receive any treatment.

Fifty-eight infants with colic were randomized into two groups:

- 29 babies in the control group received no treatment;

- babies in the experimental group received 1-3 sessions of craniosacral therapy (CST) until symptoms were resolved.

Evaluations were performed until day 24 of the study. Crying hours served as the primary outcome measure. The secondary outcome measures were the hours of sleep and the severity, measured by an Infantile Colic Severity Questionnaire (ICSQ).

Statistically significant differences were observed in favor of the experimental group compared to the control group on day 24 in all outcome measures:

- crying hours (mean difference = 2.94; 95% CI = 2.30 to 3.58; p < 0.001);

- hours of sleep (mean difference = 2.80; 95% CI = −3.85 to −1.73; p < 0.001);

- colic severity (mean difference = 17.24; 95% CI = 14.42 to 20.05; p < 0.001).

Also, the differences between the groups ≤ 2 CST sessions (n = 19), 3 CST sessions (n = 10), and control (n = 25) were statistically significant on day 24 of the treatment for crying, sleep and colic severity outcomes (p < 0.001).
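A quick plausibility check one can run on figures like these is to ask whether each reported mean difference lies inside its own 95% confidence interval. The sketch below does only that, using the numbers exactly as quoted above; the function names are mine and purely illustrative, and the check says nothing about the validity of the trial itself.

```python
# Reported day-24 results, copied from the summary above:
# (outcome, mean difference, lower CI bound, upper CI bound)
reported = [
    ("crying hours", 2.94, 2.30, 3.58),
    ("hours of sleep", 2.80, -3.85, -1.73),
    ("colic severity", 17.24, 14.42, 20.05),
]


def inside_interval(estimate, lower, upper):
    """Return True if the point estimate lies within [lower, upper]."""
    return lower <= estimate <= upper


def check(rows):
    """Map each outcome to whether its estimate falls inside its own CI."""
    return {name: inside_interval(md, lo, hi) for name, md, lo, hi in rows}


if __name__ == "__main__":
    for name, ok in check(reported).items():
        status = "consistent" if ok else "estimate outside its interval"
        print(f"{name}: {status}")
```

As quoted, the sleep outcome fails this check: the estimate is positive while both interval bounds are negative. This may be no more than a sign convention slip in the reporting, but it cannot be resolved from the summary alone.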

The authors concluded that babies with infantile colic may obtain a complete resolution of symptoms on day 24 by receiving 2 or 3 CST sessions compared to the control group, which did not receive any treatment.

Why do SCAM researchers so often have no problem leaving the control group of patients in clinical trials without any treatment at all, while shying away from administering a placebo? Is it because they enjoy being the laughingstock of the science community? Probably not.

I suspect the reason might be that often they know that their treatments are placebos and that their trials would otherwise generate negative findings. Whatever the reasons, this new study demonstrates three things many of us already knew:

- Colic in babies always resolves on its own but can be helped by a placebo response (e.g. via the non-blinded parents), by holding the infant, and by paying attention to the child.

- Flawed trials lend themselves to drawing the wrong conclusions.

- Craniosacral therapy is not biologically plausible and most likely not effective beyond placebo.

The authors of this article searched 37 online sources, as well as print libraries, for homeopathy (HOM) and related terms in eight languages (1980 to March 2021). They included studies that compared a homeopathic medicine or intervention with a control regarding the therapeutic or preventive outcome of a disease (classified according to International Classification of Diseases-10). Subsequently, the data were extracted independently by two reviewers and analyzed descriptively.

A total of 636 investigations met the inclusion criteria, of which 541 had a therapeutic and 95 a preventive purpose. Seventy-three percent were randomized controlled trials (n = 463), whereas the rest were non-randomized studies (n = 173). The most frequently employed comparator was placebo (n = 400).

The type of homeopathic intervention was classified as:

- multi-constituent or complex (n = 272),

- classical or individualized (n = 176),

- routine or clinical (n = 161),

- isopathic (n = 19),

- various (n = 8).
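For readers who like to verify such tallies, here is a minimal sketch that checks whether the counts quoted above add up to the stated total of 636 and whether the RCT share rounds to 73%. The variable and function names are my own; the numbers are taken from the text.

```python
# Counts as quoted in the summary above
total = 636
by_purpose = {"therapeutic": 541, "preventive": 95}
by_design = {"randomized": 463, "non-randomized": 173}
by_intervention = {
    "multi-constituent or complex": 272,
    "classical or individualized": 176,
    "routine or clinical": 161,
    "isopathic": 19,
    "various": 8,
}


def adds_up(parts, expected):
    """Return True if the category counts sum to the expected total."""
    return sum(parts.values()) == expected


# Share of randomized trials, rounded to a whole percent
rct_share = round(100 * by_design["randomized"] / total)

if __name__ == "__main__":
    for label, parts in [("purpose", by_purpose),
                         ("design", by_design),
                         ("intervention", by_intervention)]:
        print(label, "adds up:", adds_up(parts, total))
    print("RCT share (%):", rct_share)
```

All three breakdowns sum to 636, so the descriptive bookkeeping of the paper is at least internally consistent.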

The potencies ranged from 1X (dilution of 10^-1) to 10 M (dilution of 100^-10,000). The included studies explored the effect of HOM in 223 different medical indications. The authors also present the evidence in an online database.
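To put the potency range into perspective, the following sketch converts a potency label into the base-10 exponent of its dilution. It assumes the usual homeopathic conventions: X (or D) means a 1:10 dilution per step, C means 1:100 per step, and M stands for 1,000 centesimal steps. The function name is hypothetical, and the exponent is returned as an integer because the dilution factors themselves are far too small to represent as floating-point numbers.

```python
import re

# log10 of the dilution factor per step (assumed standard conventions)
STEP_LOG10 = {"X": 1, "D": 1, "C": 2}


def log10_dilution(potency):
    """Return the base-10 exponent of the dilution, e.g. '1X' -> -1."""
    text = potency.replace(" ", "").upper()
    match = re.fullmatch(r"(\d+)([XDCM])", text)
    if match is None:
        raise ValueError(f"unrecognised potency: {potency!r}")
    steps, scale = int(match.group(1)), match.group(2)
    if scale == "M":
        # 1M = 1,000 centesimal steps, each contributing a factor of 10^-2
        return -2 * 1000 * steps
    return -STEP_LOG10[scale] * steps


if __name__ == "__main__":
    for label in ["1X", "30C", "10 M"]:
        print(f"{label}: 10^{log10_dilution(label)}")
```

Under these assumptions, the upper end of the range (10 M) corresponds to a dilution of 10^-20,000.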

The authors concluded that this bibliography maps the status quo of clinical research in HOM. The data will serve for future targeted reviews, which may focus on the most studied conditions and/or homeopathic medicines, clinical impact, and the risk of bias of the included studies.

There are still skeptics who claim that no evidence exists for homeopathy. This paper proves them wrong. The number of studies may seem sizable to homeopaths, but compared to most other so-called alternative medicines (SCAMs), it is low. And compared to any conventional field of healthcare, it is truly tiny.

There are also those who claim that no rigorous trials of homeopathy with positive results have ever emerged. This assumption is also erroneous. There are several such studies, but this paper was not aimed at identifying them. Obviously, the more important question is this: what does the totality of the methodologically sound evidence show? It fails to convincingly demonstrate that homeopathy has effects beyond placebo.

The present review was unquestionably a lot of tedious work, but it does not address these latter questions. It was published by known believers in homeopathy and sponsored by the Tiedemann Foundation for Classical Homeopathy, the Homeopathy Foundation of the Association of Homeopathic Doctors (DZVhÄ), both in Germany, and the Foundation of Homeopathy Pierre Schmidt and the Förderverein komplementärmedizinische Forschung, both in Switzerland.

The dataset established by this article will now almost certainly be used for numerous further analyses. I hope that this work will not be left to enthusiasts of homeopathy who have often shown themselves to be blinded by their own biases and are thus no longer taken seriously outside the realm of homeopathy. It would be much more productive, I feel, if independent scientists could tackle this task.

Guest post by Norbert Aust and Viktor Weisshäupl

Readers of this blog may remember the recent study of Frass et al. about the adjunct homeopathic treatment of patients suffering from non-small cell lung cancer (here). It was published in 2020 by the ‘Oncologist’, a respectable journal, and came to stunning results about the effectiveness of homeopathy.

In our analysis, however, we found strong indications for duplicity: important study parameters like exclusion criteria or observation time were modified post hoc, and data showed characteristics that occur when unwanted data sets get removed.

We, that is the German Informationsnetzwerk Homöopathie and the Austrian ‘Initiative für wissenschaftliche Medizin’, had informed the Medical University Vienna about our findings – and the research director then asked the Austrian Agency for Scientific Integrity (OeAWI) to review the paper. The analysis took some time and included not only the paper and publicly available information but also the original data. In the end, OeAWI corroborated our findings: The results are not based on sound research but on modified or falsified data.

Here is their conclusion in full:

The committee concludes that there are numerous breaches of scientific integrity in the Study, as reported in the Publication. Several of the results can only be explained by data manipulation or falsification. The Publication is not a fair representation of the Study. The committee cannot for all the findings attribute the wrongdoings and incorrect representation to a single individual. However following our experience it is highly unlikely that the principal investigator and lead author, but also the co-authors were unaware of the discrepancies between the protocols and the Publication, for which they bear responsibility. (original English wording)

Profil, the leading news magazine of Austria, reported on the case in its issue of October 24, 2022, pp 58-61 (in German). There the lead author, Prof. M. Frass, a member of Edzard’s alternative medicine hall of fame, was asked for his comments. Here is his concluding statement:

All the allegations are known to us and completely incomprehensible, we can refute all of them. Our work was performed observing all scientific standards. The allegation of breaching scientific integrity is completely unwarranted. To us, it is evident that not all documents were included in the analysis of our study. Therefore we requested insight into the records to learn about the basis for the final statement.

(Die Vorwürfe sind uns alle bekannt und absolut unverständlich, alle können wir entkräften. Unsere Arbeit wurde unter Einhaltung aller wissenschaftlichen Standards durchgeführt. Der Vorhalt von Verstößen gegen die wissenschaftliche Integrität entbehrt jeder Grundlage. Für uns zeigt sich offenkundig, dass bei der Begutachtung unserer Studie nicht alle Unterlagen miteinbezogen wurden. Aus diesem Grunde haben wir um Akteneinsicht gebeten, um die Grundlagen für das Final Statement kennenzulernen.)

The OeAWI together with the Medical University Vienna asked the ‘Oncologist’ for a retraction of this paper – which has not occurred as yet.