
On this blog, we are often told that only a few chiros still believe in Palmer’s gospel of subluxation. This 2023 article seems to tell a different story.

The authors claim that their paper demonstrates the widespread use and acceptance of the term subluxation and acknowledges the broader chiropractic interpretation by recognition and adoption of the term outside the profession. In particular, it emphasizes the medical recognition supported by some of the medical evidence incorporating the construct of a chiropractic vertebral subluxation complex and its utilization in practice.

The vertebral subluxation concept is similar to the terms spinal dysfunction, somatic dysfunction, segmental dysfunction or the vague vertebral lesion. These terms are primarily used by osteopaths, physiotherapists, and medical doctors to focus their manipulative techniques, but they relate primarily to the physical-mechanical aspects. In this respect, these terms are limited in what they signify. The implication of just plain osseous biomechanical dysfunction does not incorporate the wider ramifications of integrated neural, vascular, and internal associations which may involve greater ramifications, and should be more appropriately referred to as a vertebral subluxation complex (VSC).

The authors also claim that, in recognition of acceptance of the subluxation terminology, a 2015 study in North America found that a majority of the 7,455 chiropractic students surveyed agreed or strongly agreed (61.4%) that the emphasis of chiropractic intervention in practice is to eliminate vertebral subluxations/vertebral subluxation complexes. A further 15.2% were neutral, and only 23.3% disagreed. It is suggested that ‘modulation’ of vertebral subluxations may have attracted an even higher rate of agreement.

The authors conclude that the evidence indicates that medicine, osteopathy, and physiotherapy have all used the term ‘subluxation’ in the chiropractic sense. However, the more appropriate, and inclusive descriptive term of vertebral subluxation complex is widely adopted in chiropractic and the WHO ICD-10. It would be most incongruous for chiropractic to move away from using subluxation when it is so well established.

A move to deny clarity to the essence of chiropractic may well affect the public image of the profession. As Hart states ‘Identifying the chiropractic profession with a focus on vertebral subluxation would give the profession uniqueness not duplicated by other health care professions and, therefore, might legitimatise the existence of chiropractic as a health care profession. An identity having a focus on vertebral subluxation would also be consistent with the original intent of the founding of the chiropractic profession.’

The term ‘vertebral subluxation’ has been in general use and understanding in the chiropractic profession as is ‘chiropractic subluxation’ and ‘vertebral subluxation complex’ (VSC). It is a part of the profession’s heritage. Critics of concepts regarding subluxation offer no original evidence to support their case, and that appears to be just political opinion rather than providing evidence to substantiate their stand.

The evidence presented in this paper supports the contention that there would be no vertebrogenic symptoms associated with physiologically normal vertebral segments. The term designated by chiropractors to identify abnormal or pathophysiological segmental dysfunction is the vertebral subluxation. It has been a part of chiropractic heritage for over 120 years.

__________________________

Vis a vis such a diatribe of compact BS, I am tempted to point out that “critics of concepts regarding subluxation offer no original evidence to support their case” mainly because it is not they who have to produce the evidence. It is the chiropractic profession that needs to do that.

But they are evidently unable to do it.

Why?

Because chiropractic subluxation is a myth and an invention by their chief charlatan.

It is true that this fabrication is intimately linked to the identity of chiropractic.

It is furthermore true that chiros feel unable to throw it overboard because they would lose their identity.

What follows is simple:

Chiropractic is a fraud.

In this retrospective matched-cohort study, Chinese researchers investigated the association of acupuncture treatment for insomnia with the risk of dementia. They collected data from the National Health Insurance Research Database (NHIRD) of Taiwan to analyze the incidence of dementia in patients with insomnia who received acupuncture treatment.

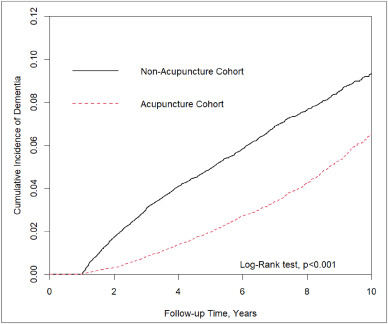

The study included 152,585 patients, selected from the NHIRD, who were newly diagnosed with insomnia between 2000 and 2010. The follow-up period ranged from the index date to the date of dementia diagnosis, date of withdrawal from the insurance program, or December 31, 2013. A 1:1 propensity score method was used to match an equal number of patients (N = 18,782) in the acupuncture and non-acupuncture cohorts. The researchers employed Cox proportional hazards models to evaluate the risk of dementia. The cumulative incidence of dementia in both cohorts was estimated using the Kaplan–Meier method, and the difference between them was assessed through a log-rank test.

Patients with insomnia who received acupuncture treatment were observed to have a lower risk of dementia (adjusted hazard ratio = 0.54, 95% confidence interval = 0.50–0.60) than those who did not undergo acupuncture treatment. The cumulative incidence of dementia was significantly lower in the acupuncture cohort than in the non-acupuncture cohort (log-rank test, p < 0.001).

The researchers concluded that acupuncture treatment significantly reduced or slowed the development of dementia in patients with insomnia.

They could be correct, of course. But, then again, they might not be. Nobody can tell!

As many who are reading these lines know: CORRELATION IS NOT CAUSATION.

But if acupuncture was not the cause for the observed differences, what could it be? After all, the authors used clever statistics to make sure the two groups were comparable!

The problem here is, of course, that they can only make the groups comparable for variables that were measured. These were about 20 parameters mostly related to medication intake and concomitant diseases. This leaves a few hundred potentially relevant variables that were not quantified and could thus not be accounted for.

My bet would be lifestyle: it is conceivable that the acupuncture group had acupuncture because they were generally more health-conscious. Living a relatively healthy life might reduce the dementia risk entirely unrelated to acupuncture. According to Occam’s razor, this explanation is miles more likely than the one about acupuncture.
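For readers who like to see such an argument in numbers, here is a minimal simulation sketch. It is not a re-analysis of the Taiwanese data. Every probability in it is an invented assumption, and the function names (`simulate`, `risk`, `risk_ratio`) are purely illustrative. In this toy cohort, acupuncture has no effect whatsoever on dementia, but an unmeasured trait ("health-conscious") makes people both more likely to have acupuncture and less likely to develop dementia.

```python
import random


def simulate(n=400_000, seed=1):
    """Simulate a cohort in which acupuncture has NO effect on dementia.

    All probabilities below are made-up assumptions for illustration only.
    """
    rng = random.Random(seed)
    # counts[(health_conscious, acupuncture)] = [people, dementia cases]
    counts = {(h, a): [0, 0] for h in (True, False) for a in (True, False)}
    for _ in range(n):
        # The unmeasured trait: half the cohort is health-conscious.
        health_conscious = rng.random() < 0.5
        # Health-conscious people are assumed to seek acupuncture more often...
        p_acupuncture = 0.30 if health_conscious else 0.10
        # ...and, independently of acupuncture, to develop dementia less often.
        p_dementia = 0.05 if health_conscious else 0.10
        acupuncture = rng.random() < p_acupuncture
        dementia = rng.random() < p_dementia
        cell = counts[(health_conscious, acupuncture)]
        cell[0] += 1
        cell[1] += dementia
    return counts


def risk(counts, acupuncture, strata=(True, False)):
    """Dementia risk in one treatment group, pooled over the given strata."""
    people = sum(counts[(h, acupuncture)][0] for h in strata)
    cases = sum(counts[(h, acupuncture)][1] for h in strata)
    return cases / people


def risk_ratio(counts, strata=(True, False)):
    """Risk with acupuncture divided by risk without it."""
    return risk(counts, True, strata) / risk(counts, False, strata)


if __name__ == "__main__":
    counts = simulate()
    print("crude risk ratio:", round(risk_ratio(counts), 2))
    print("health-conscious only:", round(risk_ratio(counts, (True,)), 2))
    print("not health-conscious only:", round(risk_ratio(counts, (False,)), 2))
```

Under these assumed probabilities, the expected crude risk ratio is 0.0625 / 0.078125 = 0.8, so acupuncture looks protective even though it does nothing. Within each stratum of the hidden trait, the ratio is close to 1. A propensity score built only from measured variables cannot perform that stratification, which is precisely the problem.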

So, what this study really demonstrates or implies is, I think, this:

- The propensity score method can never be perfect in generating completely comparable groups.

- The JTCM publishes rubbish.

- Correlation is not causation.

- To establish causation in clinical medicine, RCTs are usually the best option.

- Occam’s razor can be useful when interpreting research findings.

Kratom (Mitragyna speciosa) belongs to the coffee family. It’s found in Southeast Asia and Africa. Traditionally, people have:

- Chewed kratom leaves.

- Made kratom tea to fight tiredness and improve productivity.

- Used kratom as medicine.

- Substituted kratom for opium.

- Used kratom during religious ceremonies.

Low doses of kratom can make you more alert, and higher doses can cause:

- Decreased pain.

- Pleasure.

- Sedation.

The mechanism of action seems to be that two of the compounds in kratom (mitragynine and 7-hydroxymitragynine) interact with opioid receptors in your brain.

Kratom is thus being promoted as a pain remedy that is safer than traditional opioids, an effective addiction withdrawal aid, and a pleasurable recreational tonic. But kratom is, in fact, a dangerous and unregulated drug that can be purchased on the Internet, a habit-forming substance that authorities say can result in opioid-like abuse and death.

The Food and Drug Administration (FDA) warned that kratom possesses the properties of an opioid, thus escalating the government’s effort to slow the usage of this alternative pain reliever. The FDA stated that the number of deaths associated with kratom use has increased. Now further concerns have emerged.

This review enumerates seven outbreaks of kratom (Mitragyna speciosa) product adulteration and contamination in the context of the United States Dietary Supplement Health and Education Act (DSHEA).

At least seven distinct episodes of kratom product contamination or adulteration are known:

- (1) krypton, a kratom product adulterated with O-desmethyltramadol that resulted in at least nine fatal poisonings;

- (2) a suspected case of kratom contamination with hydrocodone and morphine;

- (3) a case of kratom adulteration with phenylethylamine;

- (4) contamination of multiple kratom products with heavy metals;

- (5) contamination of kratom products by multiple Salmonella enterica serotypes;

- (6) exposure of federal agents raiding a synthetic cannabinoid laboratory to kratom alkaloids;

- (7) suspected kratom product adulteration with exogenous 7-hydroxymitragynine.

The authors concluded that inadequate supplement regulation contributed to multiple examples of kratom contamination and adulteration, illustrating the potential for future such episodes involving kratom and other herbal supplements.

I had all but forgotten about these trials until a comment by ‘Mojo’ (thanks Mojo!) reminded me of this article in the JRSM by M.E. Dean. It reviewed these early trials of homeopathy back in 2006. Here are the crucial excerpts:

The homeopath in both trials was a Dr Herrmann, who received a 1-year contract in February 1829 to test homeopathy with the Russian military.3 The first study took place at the Military Hospital in the market town of Tulzyn, in the province of Podolya, Ukraine.4 At the end of 3 months, 164 patients had been admitted, 123 pronounced cured, 18 were convalescing, 18 still sick, and six had died. The homeopathic ward received many gravely ill patients, and the small number of deaths was shown at autopsy to be due to advanced gross pathologies. The results were interesting enough for the Russian government to order Herrmann to the Regional Military Hospital at St Petersburg to take part in a larger trial, supervised by a Dr Gigler. Patients were admitted to an experimental homeopathic ward, for treatment by Herrmann, and comparisons were made with the success rate in the allopathic wards, as happened in Tulzyn. The novelty was Gigler’s inclusion of a ‘no treatment’ ward where patients were not subject to conventional drugging and bleeding, or homeopathic dosing. The untreated patients benefited from baths, tisanes, good nutrition and rest, but also:

‘During this period, the patients were additionally subjects of an innocent deception. In order to deflect the suspicion that they were not being given any medicine, they were prescribed pills made of white breadcrumbs or cocoa, lactose powder or salep infusions, as happened in the homeopathic ward.’3 (page 415)

The ‘no treatment’ patients, in fact, did better than those in both the allopathic and homeopathic wards. The trial had important implications not just for homeopathy but also for the excessive allopathic drugging and bleeding that was prevalent. As a result of the report, homeopathy was banned in Russia for some years, although allopathy was not.

… A well-known opponent of homeopathy, Carl von Seidlitz, witnessed the St Petersburg trial and wrote a hostile report.5 He then conducted a homeopathic drug test in February 1834 at the Naval Hospital in the same city in which healthy nursing staff received homeopathically-prepared vegetable charcoal or placebo in a single-blind cross-over design.6 Within a few months, Armand Trousseau and colleagues were giving placebo pills to their Parisian patients; perhaps in the belief that they were testing homeopathy, and fully aware they were testing a placebo response.7,8 A placebo-controlled homeopathic proving took place in Nuremberg in 1835 and even included a primitive form of random assignment—identical vials of active and placebo treatment were shuffled before distribution.9 Around the same time in England, Sir John Forbes treated a diarrhoea outbreak after dividing his patients into two groups: half received allopathic ‘treatment as usual’ and half got bread pills. He saw no difference in outcome, and when he reported the experiment in 1846 he added that the placebos could just as easily have been homeopathic tablets.10 In 1861, a French doctor gave placebo pills to patients with neurotic symptoms, and his attitude is representative: he called the placebo ‘orthodox homeopathy’, because, as he said, ‘Bread pills or globules of Aconitum 30c or 40c amount to the same thing’.11


Kourtney Kardashian believes that vaginal health is an important but not sufficiently talked about part of women’s well-being. So, why not make a bit of money on the subject? A recent article explains in more detail:

The reality TV star recently launched a vitamin sweet called Lemme Purr to boost the health of your vagina. On her Instagram channel, she says these gummies use pineapple, vitamin C, and probiotics to target vaginal health and pH levels that “support freshness and taste”.

Kourtney continues with the selling words “Give your vagina the sweet treat it deserves (and turn it into a sweet treat)”. One of the claims she makes is that the vitamin sweet supports a healthy vaginal microflora. As a researcher specialising in the role of vaginal microflora for women’s health, I was curious and wanted to find out which active ingredients this claim is based on.

Lemme Purr contains pineapple extract (probably for its taste), vitamin C (not really needed if you have a balanced diet), and a clinically tested probiotic (Bacillus coagulans). According to the product description, the probiotic has been shown in clinical studies to support vaginal health, freshness, and odour. This surprised me – I should know about these studies and effects as this is my primary research field.

A healthy vaginal microflora is composed of lactobacilli that keep the pH low and protect us from infections. My colleagues and I never identified Bacillus coagulans as being important for the health of vaginas, even though we have analysed thousands of samples during recent years. From other research groups and our own results, we know that Lactobacillus crispatus is the species that is associated with vaginal health and female fertility.

As I may have missed something important, I immediately checked what has been published on that probiotic in scientific journals. I found one systematic review and meta-analysis (a type of analysis where many individual studies are taken together) that mentions Bacillus coagulans. Apparently, it may improve stool frequency and symptoms of constipation, although the authors conclude that more research is needed.

On the topic of women’s vaginal health, I could only find a single study. There, 70 women with vaginal discomfort reported symptom relief after direct vaginal administration of the probiotic. There is nothing published on the oral administration of the probiotic that could support the claims made by Kourtney.

__________________________

I was not entirely sure where women are supposed to put Kourtney’s gummies. So, I watched a video where Kourtney applies one of these items herself. I am very pleased to report that, in the video, she put one in her mouth!

After this relief, I ran a few Medline searches to get an impression of what the evidence tells us. In contrast to the author of the above article, I found plenty of literature on the subject and quite a few clinical trials. So, maybe Kourtney is on to something?

Somehow, I doubt it. I did not find a study with her product. Call me a skeptic, but I do get the feeling after looking at Kourtney’s website that she is much more interested in money than vaginal health.

A recent article in ‘The Lancet Regional Health’ emphasized the “need for reimagining India’s health system and the importance of an inclusive approach for Universal Health Coverage” by employing traditional medicine, including homeopathy. This prompted a response by Siddhesh Zadey that I consider worthy of reproducing here in abbreviated form:

… Since the first trial conducted in 1835 that questioned homeopathy’s efficacy, multiple randomized controlled trials (RCTs) and other studies compiled in several systematic reviews and meta-analyses have shown that there is no reliable and clinically significant effect of non-individualized or individualized homeopathic treatments across disease conditions ranging from irritable bowel syndrome in adults to acute respiratory tract infections in children when compared to placebo or other treatments. Even reviews that support homeopathy’s efficacy consistently caution about low quality of evidence and raise questions on its clinical use. The most recent analysis of reporting bias in homeopathic trials depicted problematic trial conduction practices that further obscure reliability and validity of evidence. Homeopathic treatments have also been linked to aggravations and non-fatal and fatal adverse events.

The Lancet has previously published on another kind of harm that uptake of homeopathy encourages in India: delay to evidence-based clinical care that can lead to fatality. Authors have pointed out that evidence for some of the alternative systems of medicine may not come from RCTs. I agree that more appropriate study designs and analytical techniques are needed for carefully studying individualized treatment paradigms. However, the need for agreement on some consistent form of evidence synthesis and empirical testing across diverse disciplines cannot be discounted. Several other disciplines including psychology, economics, community health, implementation science, and public policy have adopted RCTs and related study designs and have passed the empirical tests of efficacy. Moreover, the ideas around mechanism of action in case of homeopathy still remain controversial and lack evidence after over a century. On the contrary, biochemical, molecular, and physiological mechanistic evidence supporting allopathic treatments has grown abundantly in the same period.

Owing to lack of evidence on its efficacy and safety, the World Health Organization had previously warned against the use of homeopathic treatments for severe diseases. Additionally, multiple countries, including Germany where the practice originated, have initiated mechanisms that discourage uptake of homeopathy while others are considering banning it. Homeopathy doesn’t work, could be harmful, and is not a part of Indian traditional medicine. While we should welcome pluralistic approaches towards UHC, we need to drop homeopathy.

(for references, see original text)

___________________

Yes, in the name of progress and in the interest of patients, “we need to drop homeopathy” (not just in India but everywhere). I quite agree!

In my very small way, I tried to issue challenges to those who believe in unbelievable stuff before, e.g.:

- A CHALLENGE FOR ALL HOMEOPATHS OF THE WORLD

- My new challenge to the ‘defenders of the homeopathic realm’: name treatments that are as useless as homeopathy

- My challenge to the homeopaths of this world

- Exercise improves cognitive function + a challenge to fans of alternative medicine

- MORE GOOD THAN HARM? I herewith challenge my critics

But I never had any success; no contenders ever came forward. One reason was, of course, that I did not offer much by way of an award. So, in case you have been waiting for the big one, the one to get rich by, this is your chance:

The Los Angeles-based Center for Inquiry Investigations Group (CFIIG) $250,000 Paranormal Challenge is the largest prize of its kind in the world—or at least it was.

It has been announced that the science-based skeptics’ organization has now raised the stakes for those making wild claims about extraordinary powers, doubling the prize offer to $500,000 for anyone who can demonstrate paranormal abilities under scientific test conditions.

“A quarter-million dollars just doesn’t go as far as it used to, apparently,” said CFIIG founder and chairman James Underdown. “This is our way of creating extra incentive for people who make farfetched claims to put up or shut up.”

CFIIG has been offering money for definitive proof of “superpowers” for more than twenty-three years; the Paranormal Challenge was modeled after the James Randi Educational Foundation’s Million Dollar Challenge, which ceased operations in 2016.

Underdown says CFIIG typically receives more than 100 applications and administers roughly half a dozen tests each year. Among those who’ve been tested are self-proclaimed telepaths, dowsers, clairaudients, healers, remote viewers, and telekinetics. To date, no applicant has ever passed even the first portion of a test, and the prize money has never been claimed.

Underdown believes this should come as no surprise. “We don’t anticipate awarding this money, because we watch these folks pretty closely,” Underdown says. “Science recognizes neither the paranormal nor the supernatural. Anyone with the ability to provably demonstrate why it should would have certainly earned the prize by now.”

Anyone interested in applying for CFIIG’s Paranormal Challenge may apply online to begin the process. Applicants must pass a two-part test of their alleged ability. The tests must be performed in a controlled environment to prevent trickery, and any expenses incurred during testing must be borne by the applicant.

________________

How about it?

Don’t you feel tempted?

Dana?

Heinrich?

Anyone?

This review investigated the characteristics, hotspots, and frontiers of global scientific output in acupuncture research for chronic pain over the past decade. The authors retrieved publications on acupuncture for chronic pain published from 2011 to 2022 from the Science Citation Index Expanded (SCI-expanded) of the Web of Science Core Collection (WoSCC). The co-occurrence relationships of journals/countries/institutions/authors/keywords were performed using VOSviewer V6.1.2, and CiteSpace V1.6.18 analyzed the clustering and burst analysis of keywords and co-cited references.

A total of 1616 articles were retrieved. The results showed that:

- the number of annual publications on acupuncture for chronic pain has increased over time;

- the main types of literature are original articles (1091 articles, 67.5 %) and review articles (351 articles, 21.7 %);

- China had the most publications (598 articles, 37 %), and Beijing University of Traditional Chinese Medicine was the most prolific institution (93 articles, 5.8 %);

- Evidence-based Complementary and Alternative Medicine was the most prolific journal (169 articles, 10.45 %);

- Liang FR was the most productive author (43 articles);

- the article published by Vickers Andrew J in 2012 had the highest number of citations (625 citations).

Recently, “acupuncture” and “pain” appeared most frequently. The hot topics in acupuncture for chronic pain based on keywords clustering analysis were experimental design, hot diseases, interventions, and mechanism studies. According to burst analysis, the main research frontiers were functional connectivity (FC), depression, and risk.

The authors concluded that this study provides an in-depth perspective on acupuncture for chronic pain studies, revealing pivotal points, research hotspots, and research trends. Valuable ideas are provided for future research activities.

I might disagree with the authors’ conclusion and would argue that they have demonstrated that:

- the acupuncture literature is dominated by China, which is concerning because we know that 1) these studies are of poor quality, 2) never report negative findings, and 3) are often fabricated;

- the articles tend to be published in journals that are more than a little suspect.

As we have seen recently, the reliable evidence that acupuncture remains effective is wafer-thin. Therefore, I feel that we are currently being misled by a flurry of rubbish publications that have one main aim: to distract from the fact that acupuncture might be nonsense.

Yes, this post is yet again about the harm chiropractors do.

No, I am not obsessed with the subject – I merely consider it to be important.

This is a case presentation of a 44-year-old male who was transferred from another emergency department for left homonymous inferior quadrantanopia noted on an optometrist visit. He reported sudden onset left homonymous hemianopia after receiving a high-velocity cervical spine adjustment at a chiropractor appointment for chronic neck pain a few days prior.

The CT angiogram of the head and neck revealed bilateral vertebral artery dissection at the left V2 and right V3 segments. MRI brain confirmed an acute infarct in the right medial occipital lobe. His right PCA stroke was likely embolic from the injured right V3 but possibly from the left V2 as well. As the patient reported progression from a homonymous hemianopia to a quadrantanopia, he likely had a migrating embolus.

The authors discussed that arterial dissection accounts for about 2% of all ischemic strokes, but may account for 8–25% in patients younger than 45 years. Vertebral artery dissection (VAD) can result from a range of causes, from trauma in sports, motor vehicle accidents, and chiropractic neck manipulations to violent coughing or sneezing.

It is estimated that 1 in 20,000 spinal manipulations results in vertebral artery aneurysm/dissection. Patients who have multiple chronic conditions are reporting higher use of so-called alternative medicine (SCAM), including chiropractic manipulation. Education about the association between VAD and chiropractor maneuvers can be beneficial to the public as these are preventable acute ischemic strokes. In addition, VAD symptoms can be subtle and patients presenting to chiropractors may have distracting pain masking their deficits. Evaluating for appropriateness of cervical manipulation in high-risk patients and detecting early clinical signs of VAD by chiropractors can be beneficial in preventing acute ischemic strokes in young patients.

Here we have a rare instance where the physicians who treated the chiro-victim were sufficiently motivated to present their findings and document them in the medical literature. Their report was published in 2021 as an abstract in conference proceedings. In other words, the report is not easy to find. Even though two years have passed, the full article does not seem to have emerged, and chances are that it will never be published.

The points I am trying to make are as follows:

- Complications after chiropractic manipulation do happen and are probably much more frequent than chiros want us to believe.

- They are only rarely reported in the medical literature because the busy clinicians who end up treating the victims do not consider this a priority and because many cases are settled in or out of court.

- Normally, it would be the ethical/moral duty of the chiros who have inflicted the damage to do the reporting.

- Yet, they seem too busy ripping off more patients by doing neck manipulations that do more harm than good.

- And then they complain that the evidence is insufficient!!!

I have featured the ‘Münster Circle’ before. The reason why I do it again today is that we have just published a new Memorandum entitled HOMEOPATHY IN THE PHARMACY. Here is its summary which I translated into English:

Due to questionable regulations in German pharmaceutical law, homeopathic medicines can be given the status of a medicinal product without having to provide valid proof of efficacy. As medicinal products, these preparations may then only be dispensed to customers in pharmacies, which, however, creates an obligation to also supply them on request or prescription. Many pharmacies go far beyond this and promote homeopathic medicines as a useful therapy option by displaying them prominently in the window. In addition, customers are recommended to use them, corresponding lecture events are supported, and much more. Often, homeopathic preparations are even produced according to pharmacies’ own formulations and marketed under their own name.

For pharmacists and pharmaceutical technical assistants (PTAs) to perform their important task in the proper supply of medicines to the population, they must have successfully completed a scientific study of pharmacy or state-regulated training. This is to ensure that customers are informed and properly advised about their medicines according to the current state of knowledge.

After successfully completing their training or studies, PTAs and pharmacists are undoubtedly able to recognize that homeopathic medicines cannot be effective beyond placebo. They do not have any significant content of active ingredients – if, for example, the high potencies that are considered to be particularly effective still have any active ingredients at all. Consequently, pharmacists and PTAs act against their better knowledge to the detriment of their customers if they create the impression through their actions that homeopathic medicines represent a sensible therapeutic option and customers are thereby encouraged to buy and use them.

Although homeopathics have no potential for direct harm in the absence of relevant amounts of pharmacologically active substances in the preparations, their distribution should nevertheless be viewed critically. The use of homeopathy can mean losing valuable time and delaying the start of effective therapy. It is often accompanied by criticism, even rejection of scientifically oriented medicine and public health, for example when homeopathy is presented as the antithesis to a threatening “pharmaceutical mafia”.

The Münster Circle appeals to pharmacists and PTAs to stop advertising homeopathic medicines as an effective therapeutic option, to stop producing and marketing them themselves, and to advise their customers that homeopathic preparations are not more effective than placebo. The professional organizations of pharmacists and other providers of further training are called upon to no longer offer courses on homeopathy – except for convincingly refuting the often abstruse claims of the supporters.

_______________________

I have pointed out for at least 20 years now that pharmacists have an ethical duty toward their clients. And this duty does not involve misleading them and selling them useless homeopathic remedies. On the contrary, it involves advising them on the basis of the best existing evidence.

When I started writing and talking about this, pharmacists seemed quite interested (or perhaps just amused?). They invited me to give lectures, I published an entire series of articles in the PJ, etc. Of late, they seem to be fed up with hearing this message and the invitations have well and truly stopped.

They may be frustrated with my message – but not as frustrated as I am with their inertia. In my view, it is nothing short of a scandal that homeopathic remedies and similarly bogus treatments still feature in pharmacies across the globe.