clinical trial

Kratom (Mitragyna speciosa, Korth.) is an evergreen tree that is indigenous to Southeast Asia. It is increasingly being used as a recreational drug, to help with opium withdrawal, and as a so-called alternative medicine (SCAM) for pain and erectile dysfunction, as a mood stabilizer, and to boost energy or concentration. When ingested, Kratom leaves produce stimulant and opioid-like effects (see also my previous post).

Kratom contains 7‑hydroxymitragynine, which is active on opioid receptors. The use of kratom carries significant risks, e.g. because there is no standardized form of administration, because it can directly damage health, and because it can lead to addiction.

There are only very few clinical trials of Kratom. One small placebo-controlled study concluded that the short-term administration of the herb led to a substantial and statistically significant increase in pain tolerance. A recent review stated that Kratom may cause drug interactions, as it is both a substrate and an inhibitor of the cytochrome P-450 system. Kratom does not appear in normal drug screens and, especially when ingested with other substances of abuse, may not be recognized as an agent of harm. There are numerous cases of death in kratom users, but many involved polypharmaceutical ingestions. There are reports of people who have been unable to stop using kratom, and of withdrawal signs and symptoms in patients or their newborn babies after kratom cessation. Both banning and failing to ban kratom place people at risk; a middle-ground alternative, placing it behind the pharmacy counter, might be useful.

In Thailand, Kratom had been outlawed since 1943, but it has now become (semi-)legal. Earlier this year, the Thai government removed the herb from the list of Category V narcotics. Following this move, some 12,000 inmates who had been convicted when Kratom was still an illegal drug received amnesty. However, Kratom producers, traders, and even researchers will still require licenses to handle the plant. Similarly, patients looking for kratom-based supplements will need a valid prescription from a licensed medical practitioner. Thai law still prohibits bulk possession of Kratom, and users are encouraged to handle only minimal amounts of the herb to avoid being prosecuted for illegal possession.

In 2018, the US Food and Drug Administration stated that Kratom possesses the properties of an opioid, thus escalating the government’s effort to slow usage of this alternative pain reliever. The FDA also wrote that the number of deaths associated with Kratom use has increased to a total of 44, up from a total of 36 since the FDA’s November 2017 report. In the majority of deaths that the FDA attributes to Kratom, subjects ingested multiple substances with known risks, including alcohol.

In most European countries, Kratom continues to be a controlled drug. In the UK the sale, import, and export of Kratom are prohibited. Yet, judging from a quick look, it does not seem to be all that difficult to obtain Kratom via the Internet.

The global market for dietary supplements has grown continuously over the past years. In 2019, it amounted to around US$ 353 billion. The pandemic led to a further significant boost in sales. Evidently, many consumers listened to the sly promotion by the supplement industry and became convinced that supplements might stimulate their immune system and thereby protect them against COVID-19 infections.

During the pre-pandemic years, US sales had typically increased by about 5% year on year. In 2020, the increase amounted to a staggering 44% (US$435 million) during the six weeks preceding April 5th, 2020, relative to the same period in 2019. The demand for multivitamins in the US peaked in March 2020, when sales had risen by 51.2%. Total sales of vitamins and other supplements amounted to almost 120 million units for that period alone. In the UK, vitamin sales increased by 63% and, in France, sales grew by around 40–60% in March 2020 compared to the same period of the previous year.

In view of such impressive sales figures, one should ask whether dietary supplements really do produce the benefit that consumers hope for. More precisely, is there any sound evidence that these supplements protect us from getting infected by COVID-19? In an attempt to answer this question, I conducted several Medline searches. Here are the conclusions of the relevant clinical trials and systematic reviews that I found:

- KSK (a polyherbal formulation from India’s Siddha system of medicine) significantly reduced SARS-CoV-2 viral load among asymptomatic COVID-19 cases and did not record any adverse effect, indicating the use of KSK in the strategy against COVID-19. Larger, multi-centric trials can strengthen the current findings.

- There is currently insufficient evidence to determine the benefits and harms of vitamin D supplementation as a treatment of COVID-19.

- Herbal supplements may help patients with COVID-19, zinc sulfate is likely to shorten the duration of olfactory dysfunction. DS therapy and herbal medicine appear to be safe and effective adjuvant therapies for patients with COVID-19. These results must be interpreted with caution due to the overall low quality of the included trials. More well-designed RCTs are needed in the future.

- No significant difference with vitamin-D supplementation on major health related outcomes in COVID-19.

- There is not enough evidence on the association between individual zinc status and COVID-19 infections and mortality.

- Omega-3 supplementation improved the levels of several parameters of respiratory and renal function in critically ill patients with COVID-19.

- A 5000 IU daily oral vitamin D3 supplementation for 2 weeks reduces the time to recovery for cough and gustatory sensory loss among patients with sub-optimal vitamin D status and mild to moderate COVID-19 symptoms. The use of 5000 IU vitamin D3 as an adjuvant therapy for COVID-19 patients with suboptimal vitamin D status, even for a short duration, is recommended.

- In this 2-sample MR study, we did not observe evidence to support an association between 25OHD levels and COVID-19 susceptibility, severity, or hospitalization. Hence, vitamin D supplementation as a means of protecting against worsened COVID-19 outcomes is not supported by genetic evidence.

- These antiviral and immune-modulating activities and their ability to stimulate interferon production recommend the use of probiotics as an adjunctive therapy to prevent COVID-19. Based on this extensive review of RCTs we suggest that probiotics are a rational complementary treatment for RTI diseases and a viable option to support faster recovery.

- In this randomized clinical trial of ambulatory patients diagnosed with SARS-CoV-2 infection, treatment with high-dose zinc gluconate, ascorbic acid, or a combination of the 2 supplements did not significantly decrease the duration of symptoms compared with standard of care.

- These findings neither support nor refute the claim that 3M3F alters the severity of COVID-19 or alleviates symptoms. More rigorous studies are required to properly ascertain the potential role of Chinese Herbal Medicine in COVID-19.

- NSO (Nigella sativa oil) supplementation was associated with faster recovery of symptoms than usual care alone for patients with mild COVID-19 infection. These potential therapeutic benefits require further exploration with placebo-controlled, double-blinded studies.

- The clinical application of LQ (Lianhua Qingwen Granules or Capsules) on the treatment of COVID-19 has significant efficacy in improving clinical symptoms and reducing the rate of clinical change to severe or critical condition. Nevertheless, due to the limited quantity and quality of the included studies, more and higher quality trials with more observational indicators are expected to be published.

- The study identified some important potential traditional Indian medicinal herbs such as Ocimum tenuiflorum, Tinospora cordifolia, Achyranthes bidentata, Cinnamomum cassia, Cydonia oblonga, Embelin ribes, Justicia adhatoda, Momordica charantia, Withania somnifera, Zingiber officinale, Camphor, and Kabusura kudineer, which could be used in therapeutic strategies against SARS-CoV-2 infection.

- Shenhuang Granule is a promising integrative therapy for severe and critical COVID-19.

- Low-certainty or very low-certainty evidence demonstrated that oral CPM (Chinese patent medicine) may have add-on potential therapeutic effects for patients with non-serious COVID-19. These findings need to be further confirmed by well-designed clinical trials with adequate sample sizes.

- XYP (Xiyanping) injection is safe and effective in improving the recovery of patients with mild to moderate COVID-19. However, further studies are warranted to evaluate the efficacy of XYP in an expanded cohort comprising COVID-19 patients at different disease stages.

- Our meta-analysis of RCTs indicated that LH (Lianhuaqingwen) in combination with usual treatment may improve the clinical efficacy in patients with mild or moderate COVID-19 without increasing adverse events. However, given the limitations and poor quality of included trials in this study, further large-sample RCTs or high-quality real-world studies are needed to confirm our conclusions.

- Reduning injection might be effective and safe in patients with symptomatic COVID-19.

- In light of the safety and effectiveness profiles, LH (Lianhuaqingwen) capsules could be considered to ameliorate clinical symptoms of Covid-19.

- QPT (Qingfei Paidu Tang) was associated with a substantially lower risk of in-hospital mortality, without extra risk of acute liver injury or acute kidney injury among patients hospitalized with COVID-19.

- This community-based RCT found that the use of a herbal medicine therapy (Jinhaoartemisia antipyretic granules and Huoxiangzhengqi oral liquids) could significantly reduce the risks of the common cold among community-dwelling residents, suggesting that herbal medicine may be a useful approach for public health intervention to minimize preventable morbidity during COVID-19 outbreak.

- Based on unresolved controversies and inconclusive findings, it could be said that generally, a single and specific therapeutics to COVID-19 is still a mirage.

- Keguan-1-based integrative therapy was safe and superior to the standard therapy in suppressing the development of ARDS in COVID-19 patients.

Confused?

Me too!

Does the evidence justify the boom in sales of dietary supplements?

More specifically, is there good evidence that the products the US supplement industry is selling protect us against COVID-19 infections?

No, I don’t think so.

So, what precisely is behind the recent sales boom?

Surely it is the claim, promoted by the industry in many different ways, that supplements protect us from COVID-19. In other words, we are being taken for a (very expensive) ride.

Exploring preventive therapeutic measures has been among the biggest challenges during the coronavirus disease 2019 (COVID-19) pandemic caused by severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2). In a multi-group study, a team of Indian and US researchers explored the feasibility of recruitment and retention, as well as potential signals of efficacy, of selected homeopathic medicines as a preventive measure against developing COVID-19.

A six-group, randomized, double-blind, placebo-controlled prophylaxis study was conducted in a COVID-19 exposed population in a quarantine facility in Mumbai, India. Each group received one of the following:

- Arsenicum album 30c,

- Bryonia alba 30c,

- a combination of Arsenicum album 30c, Bryonia alba 30c, Gelsemium sempervirens 30c, and Influenzinum 30c,

- coronavirus nosode CVN01 30c,

- Camphora 1M,

- placebo.

Six pills were administered twice a day for 3 days. The primary outcome measure was the feasibility of recruitment and retention in this quarantined setting. Secondary outcomes were the number of subjects testing positive for COVID-19 after developing symptoms of illness, the number of subjects hospitalized, and days to recovery.

Good rates of recruitment and retention were achieved. Of 4,497 quarantined individuals, 2,343 sought enrollment, with 2,294 enrolled and 2,233 completing the trial (49.7% recruitment, 97.3% retention). Subjects who were randomized to either Bryonia alba (group 2) or to the CVN01 nosode (group 4) signaled a numerically lower incidence of laboratory-confirmed COVID-19 and a shorter period of illness, with evidence of fewer hospitalizations than those taking placebo. The three other groups did not show signals of efficacy.
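The recruitment and retention percentages can be reproduced from the four counts above. The following Python sketch uses only those reported counts; which denominator the trialists meant by "recruitment" is my assumption. Retention (completers among those enrolled) comes out at 97.3%, and the 49.7% figure corresponds to completers divided by all 4,497 quarantined individuals, whereas enrolled divided by quarantined would give about 51.0%.

```python
# Counts as reported in the trial summary above.
quarantined = 4497
sought_enrollment = 2343
enrolled = 2294
completed = 2233


def pct(numerator: int, denominator: int) -> float:
    """Percentage rounded to one decimal place."""
    return round(100 * numerator / denominator, 1)


retention = pct(completed, enrolled)               # completers among enrolled
completers_of_pool = pct(completed, quarantined)   # completers among all quarantined
enrolled_of_pool = pct(enrolled, quarantined)      # enrolled among all quarantined
sought_of_pool = pct(sought_enrollment, quarantined)  # volunteers among all quarantined

print(retention, completers_of_pool, enrolled_of_pool, sought_of_pool)
```

Keeping each ratio in a named variable makes it obvious which numerator and denominator a reported percentage is built from.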

The authors concluded that this pilot study supports the feasibility of a larger randomized, double-blind, placebo-controlled trial. Bryonia alba 30c and CVN01 30c should both be explored in disease prevention or shortening the course of disease symptomatology in a COVID-19-exposed population.

Signals of efficacy?

Are they kidding us?

The results failed to be statistically significant!

Hence the conclusions should be rewritten as follows:

This pilot study supports the feasibility of a larger trial in India where people have been told by an irresponsible government to believe in homeopathy. None of the 5 homeopathic treatments generated encouraging findings and none should be explored further. Studies of this nature must be discouraged firstly because homeopaths would not accept the findings of a trial of non-individualized homeopathy, and secondly because such trials will further confuse the public who might think that homeopathy is worth trying.

Kneipp therapy goes back to Sebastian Kneipp (1821-1897), a Catholic priest who was convinced that he had cured himself of tuberculosis by using various hydrotherapies. Kneipp is often considered to be ‘the father of naturopathy’. Kneipp therapy consists of hydrotherapy, exercise therapy, nutritional therapy, phototherapy, and ‘order’ therapy (or balance). Kneipp therapy remains popular in Germany, where whole spa towns live off this concept.

The obvious question is: does Kneipp therapy work? A team of German investigators has tried to answer it. For this purpose, they conducted a systematic review to evaluate the available evidence on the effect of Kneipp therapy.

A total of 25 sources, including 14 controlled studies (13 of which were randomized), were included. The authors considered almost any type of study, whether published or unpublished, controlled or uncontrolled. According to EPHPP-QAT, 3 studies were rated as “strong,” 13 as “moderate” and 9 as “weak.” Nine (64%) of the controlled studies reported significant improvements after Kneipp therapy in a between-group comparison in the following conditions:

- chronic venous insufficiency,

- hypertension,

- mild heart failure,

- menopausal complaints,

- sleep disorders in different patient collectives,

- as well as improved immune parameters in healthy subjects.

No significant effects were found in:

- depression and anxiety in breast cancer patients with climacteric complaints,

- quality of life in post-polio syndrome,

- disease-related polyneuropathic complaints,

- the incidence of cold episodes in children.

Eleven uncontrolled studies reported improvements in allergic symptoms, dyspepsia, quality of life, heart rate variability, infections, hypertension, well-being, pain, and polyneuropathic complaints.
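The counts in this review summary are internally consistent, and a few lines of arithmetic make the relevant proportions explicit. This Python sketch uses only the numbers quoted above: 14 controlled plus 11 uncontrolled studies give the 25 sources, the 64% refers to 9 of the 14 controlled studies, and only 3 of the 25 sources (12%) were rated methodologically "strong".

```python
# Counts as reported in the review summary above.
controlled = 14
uncontrolled = 11
quality_ratings = {"strong": 3, "moderate": 13, "weak": 9}
controlled_with_positive_result = 9

total_sources = controlled + uncontrolled
rated_sources = sum(quality_ratings.values())

# Share of controlled studies reporting a significant between-group effect.
positive_share = round(100 * controlled_with_positive_result / controlled)
# Share of all sources rated methodologically "strong".
strong_share = round(100 * quality_ratings["strong"] / rated_sources)

print(total_sources, rated_sources, positive_share, strong_share)
```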

The authors concluded that Kneipp therapy seems to be beneficial for numerous symptoms in different patient groups. Future studies should pay even more attention to methodologically careful study planning (control groups, randomisation, adequate case numbers, blinding) to counteract bias.

On the one hand, I applaud the authors. Considering the popularity of Kneipp therapy in Germany, such a review was long overdue. On the other hand, I am somewhat concerned about their conclusions. In my view, they are far too positive:

- almost all studies had significant flaws, which means their findings are less than reliable;

- for most indications, there are only one or two studies, and it seems unwarranted to claim that Kneipp therapy is beneficial for numerous symptoms on the basis of such scarce evidence.

My conclusion would therefore be quite different:

Despite its long history and considerable popularity, Kneipp therapy is not supported by enough sound evidence for issuing positive recommendations for its use in any health condition.

Diabetic polyneuropathy is a prevalent, potentially disabling condition. Evidence-based treatments include specific anticonvulsants and antidepressants for pain management. All current guidelines advise a personalized approach: start at a low dose and titrate to the maximum response with the fewest side effects or adverse events. Homeopathy has not been shown to be effective, but it is nevertheless promoted by many homeopaths as an effective therapy.

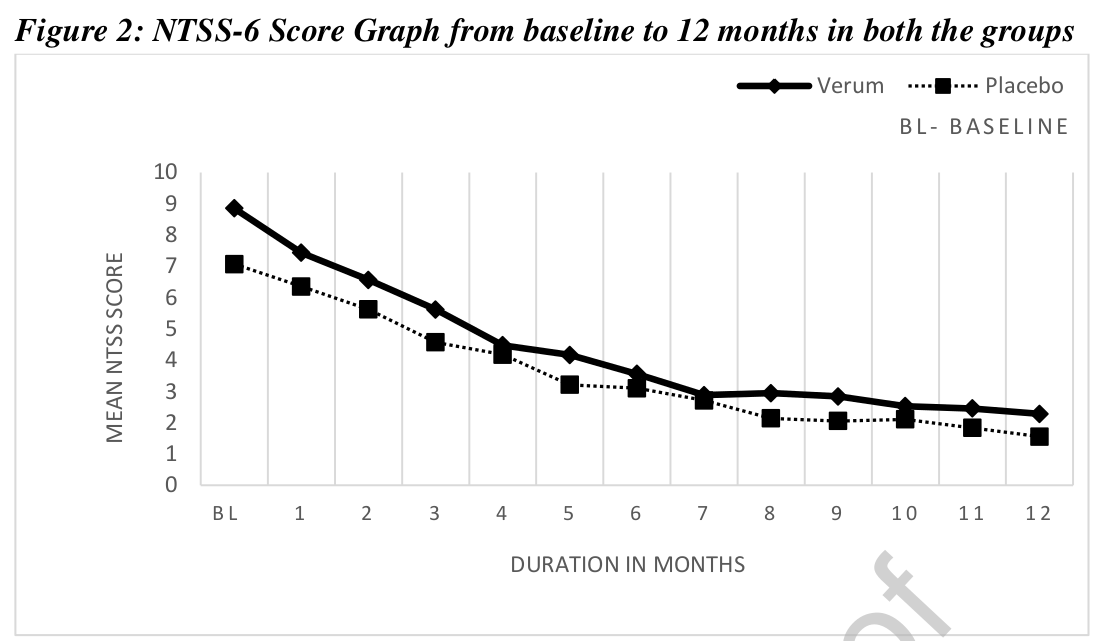

This study assessed the efficacy of individualized homeopathic medicines in the management of diabetic polyneuropathy. A multi-centric double-blind, placebo-controlled, randomized clinical trial was conducted by the Indian Central Council for Research in Homoeopathy at six centers with a sample size of 84. Based on earlier observational studies and repertorial anamnesis of DDSP symptoms, 15 homeopathic medicines were shortlisted, and validated scales were used for evaluating the outcomes post-intervention. The primary outcome measure was a change in Neuropathy Total Symptom Score-6 (NTSS-6) from baseline to 12 months. Secondary outcomes included changes in peripheral nerve conduction study (NCS), World Health Organization Quality of Life BREF (WHOQOL-BREF) and Diabetic Neuropathy Examination (DNE) score at 12 months.

Data of 68 enrolled cases were considered for data analysis. A statistically significant difference (p<0.014) was found in NTSS-6 post-intervention in the Verum group. A positive trend was noted for the Verum group as per the graph plotted for DNE score and assessment done for NCS. No significant difference was found between the groups for WHOQOL-Bref. Out of 15 pre-identified homeopathic medicines, 11 medicines were prescribed in potencies in ascending order from 6C to 1M.

The authors refrain from drawing conclusions about the efficacy of their homeopathic treatment (which is more than a little odd, as their stated aim was to assess the efficacy of individualized homeopathic medicines in the management of diabetic polyneuropathy). So, please allow me to do it for them:

The findings of this study confirm that homeopathy is a useless treatment.

Homeopaths believe that their remedies work for every condition imaginable and that naturally includes irritable bowel syndrome (IBS). But what does the evidence show?

The aim of this pilot study was to evaluate the efficacy of individualized homeopathic treatment in patients with IBS. The study was carried out at the National Homeopathic Hospital of the Secretary of Health, Mexico City, Mexico and included 41 patients: 3 men and 38 women, mean age 54 ± 14.89 years, diagnosed with IBS as defined by the Rome IV Diagnostic criteria. Single individualized homeopathics were prescribed for each patient, taking into account all presenting symptoms, clinical history, and personality via repertorization using RADAR Homeopathic Software. The homeopathic remedies were used at the fifty-millesimal (LM) potency per the Mexican Homeopathic Pharmacopoeia starting with 0/1 and increasing every month (0/2, 0/3, 0/6). Severity scales were applied at the beginning of treatment and every month for 4 months of treatment. The evaluation was based on comparing symptom severity scales during treatment.

The results demonstrated that 100% of patients showed some improvement and 63% showed major improvement or were cured. The study showed a significant decrease in the severity of symptom scores 3 months after the treatment, with the pain score showing a decrease already one month after treatment.

The authors state that the results highlight the importance of individualized medicine regimens using LM potency, although the early decrease in pain could also be due to the fact that Lycopodium clavatum and Nux vomica were the main homeopathic medicines prescribed, and these medicines contain many types of alkaloids, which have shown significant analgesic effects on pain caused by physical and chemical stimulation.

The authors concluded that this pilot study suggests that individualized homeopathic treatment using LM potencies benefits patients with IBS.

Where to begin?

Let me mention just a few rather obvious points:

- A pilot study is not meant to evaluate efficacy but to test the feasibility of a definitive trial.

- The study has no control group; therefore, the outcome cannot be attributed to the treatment but is most likely due to a mixture of placebo effects, regression towards the mean, and the natural history of IBS.

- The conclusions are not warranted.

- The paper was published in the infamous Altern Ther Health Med.
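Regression towards the mean is easy to underestimate. The following Python sketch is a hypothetical simulation, not a model of the IBS study: its severity scale, noise levels and enrolment threshold are all assumptions. It enrols only people whose symptom score is high at baseline, gives them no treatment at all, and still shows a clear "improvement" at follow-up, simply because unusually high first measurements tend to be followed by less extreme ones.

```python
import random
import statistics


def simulate_untreated_cohort(n: int = 10_000, threshold: float = 65.0,
                              seed: int = 1) -> tuple[float, float]:
    """Return mean baseline and mean follow-up scores of 'enrolled' people.

    Hypothetical model: each person has a stable true severity
    (mean 50, SD 10) and every measurement adds independent noise (SD 10).
    Only people whose baseline score reaches `threshold` are enrolled,
    and nobody receives any treatment.
    """
    rng = random.Random(seed)
    baseline, follow_up = [], []
    for _ in range(n):
        true_severity = rng.gauss(50, 10)
        first = true_severity + rng.gauss(0, 10)
        if first >= threshold:  # enrol only those who look severe today
            baseline.append(first)
            # Second measurement: same true severity, fresh noise, no treatment.
            follow_up.append(true_severity + rng.gauss(0, 10))
    return statistics.mean(baseline), statistics.mean(follow_up)


before, after = simulate_untreated_cohort()
print(f"baseline {before:.1f}, follow-up {after:.1f}")
```

Without a control group that is selected in the same way, an uncontrolled before-after comparison cannot separate this artefact from a genuine treatment effect.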

Just to make sure that nobody is fooled into believing that homeopathy might nonetheless be effective for IBS, here is what the Cochrane review on this subject tells us: no firm conclusions regarding the effectiveness and safety of homeopathy for the treatment of IBS can be drawn. Further high quality, adequately powered RCTs are required to assess the efficacy and safety of clinical and individualised homeopathy for IBS compared to placebo or usual care.

In my view, even the conclusion of the Cochrane review is odd and slightly misleading. The correct conclusion would have been something more to the point:

THE CURRENT TRIAL EVIDENCE FAILS TO INDICATE THAT HOMEOPATHY IS AN EFFECTIVE TREATMENT FOR IBS.

This study aimed to assess the feasibility of a future definitive trial, with a preliminary assessment of differences between effects of individualized homeopathic (IH) medicines and placebos in the treatment of cutaneous warts.

A double-blind, randomized, placebo-controlled trial (n = 60) was conducted at the dermatology outpatient department of the Homoeopathic Medical College and Hospital, West Bengal. Patients were randomized to receive either IH (n = 30) or identical-looking placebos (n = 30). The primary outcome measures were numbers and sizes of warts; the secondary outcome measure was the Dermatology Life Quality Index (DLQI) questionnaire measured at baseline, and every month up to 3 months. Group differences and effect sizes were calculated on the intention-to-treat sample.

Attrition rate was 11.6% (IH, 3; placebo, 4). Intra-group changes were significantly greater in the IH group than in the placebo group. Inter-group differences were statistically non-significant (all p > 0.05, Mann-Whitney U tests) with small effect sizes, both in the primary outcomes (number of warts after 3 months: IH median [interquartile range; IQR] 1 [1, 3] vs. placebo 1 [1, 2]; p = 0.741; size of warts after 3 months: IH 5.6 mm [2.6, 40.2] vs. placebo 6.3 mm [0.8, 16.7]; p = 0.515) and in the secondary outcomes (DLQI total after 3 months: IH 4.5 [2, 6.2] vs. placebo 4.5 [2.5, 8]; p = 0.935). Thuja occidentalis (28.3%), Natrum muriaticum (10%), and Sulphur (8.3%) were the most frequently prescribed medicines. No homeopathic aggravations or serious adverse events were reported.
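The attrition figure follows directly from the dropout counts. A minimal Python check, using only the numbers reported above (30 patients per arm, 3 and 4 dropouts): 7 of 60 randomized patients is 11.67%, so the published 11.6% appears to be truncated rather than rounded. The difference is trivial and does not affect the findings.

```python
# Dropouts per arm and randomized numbers, as reported above.
dropouts = {"IH": 3, "placebo": 4}
randomized_per_arm = 30
randomized_total = 2 * randomized_per_arm

overall_attrition = 100 * sum(dropouts.values()) / randomized_total
per_arm_attrition = {arm: 100 * n / randomized_per_arm for arm, n in dropouts.items()}

print(f"overall {overall_attrition:.2f}%")
for arm, rate in per_arm_attrition.items():
    print(f"{arm}: {rate:.1f}%")
```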

The authors concluded that, as regards efficacy, the preliminary study was inconclusive, with a statistically non-significant direction of effect favoring homeopathy. The trial succeeded in showing that an adequately powered definitive trial is both feasible and warranted.

Partly the same group of authors recently published another trial of homeopathy with similar findings. At the time, I commented as follows:

We have come across this terminology before; homeopaths seem to like it. It prevents them from calling a negative trial by its proper name: A NEGATIVE TRIAL. In their view

- a positive trial is a study where homeopathy yields better results than placebo,

- a negative trial is a study where placebo yields better results than homeopathy,

- an inconclusive trial is a study where homeopathy yields results that are not significantly different from placebo.

Is this silly?

Yes, it is completely bonkers!

Is it dishonest?

Yes, in my view, it is.

Why is it done nonetheless?

Perhaps a glance at the affiliations of the authors provides an answer. And here is the list of the affiliations of the trialists of the present cutaneous wart study:

- 1Department of Repertory, D.N. De Homoeopathic Medical College and Hospital, Govt. of West Bengal, Tangra, Kolkata, West Bengal, India.

- 2D.N. De Homoeopathic Medical College and Hospital, Govt. of West Bengal, Tangra, Kolkata, West Bengal, India.

- 3Department of Organon of Medicine and Homoeopathic Philosophy, The Calcutta Homoeopathic Medical College and Hospital, Kolkata, West Bengal, India.

- 4Department of Practice of Medicine, The Calcutta Homoeopathic Medical College and Hospital, Kolkata, West Bengal, India.

- 5Department of Repertory, National Institute of Homoeopathy, Ministry of AYUSH, Govt. of India, Kolkata, West Bengal, India.

- 6Department of Organon of Medicine and Homoeopathic Philosophy, D.N. De Homoeopathic Medical College and Hospital, Govt. of West Bengal, Tangra, Kolkata, West Bengal, India.

- 7Department of Pediatrics, National Institute of Homoeopathy, Ministry of AYUSH, Govt. of India, Salt Lake, Kolkata, West Bengal, India.

- 8Department of Organon of Medicine and Homoeopathic Philosophy, State National Homoeopathic Medical College and Hospital, Lucknow, Uttar Pradesh.

- 9Independent Researcher; Champsara, Baidyabati, Hooghly, West Bengal, India.

- 10Independent Researcher, Shibpur, Howrah, West Bengal, India.

And, as before, this paper also contains this statement:

Conflict of interest statement

None declared.

Pre-hypertension is usually defined as a systolic pressure reading between 120 mmHg and 139 mmHg, or a diastolic reading between 80 mmHg and 89 mmHg. It remains a significant public health challenge, and appropriate intervention is required to stop its progression to hypertension and cardiovascular diseases.
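The pre-hypertension range quoted above can be written as a small classifier, which also makes clear what "progression to hypertension" means for a single reading. A minimal Python sketch, assuming readings in mmHg and assuming the conventional threshold of 140/90 mmHg for hypertension (the text itself gives only the pre-hypertension range); the function name `classify_bp` is hypothetical.

```python
def classify_bp(systolic: float, diastolic: float) -> str:
    """Classify a single blood pressure reading (mmHg).

    Assumption: hypertension starts at >= 140 systolic or >= 90 diastolic.
    Pre-hypertension: systolic 120-139 or diastolic 80-89, provided the
    reading is not already in the hypertensive range.
    """
    if systolic >= 140 or diastolic >= 90:
        return "hypertension"
    # Both values are now below the hypertension thresholds.
    if systolic >= 120 or diastolic >= 80:
        return "pre-hypertension"
    return "normal"


print(classify_bp(118, 76), classify_bp(132, 78), classify_bp(126, 92))
```

Checking the hypertension thresholds first means the pre-hypertension test only needs lower bounds, so fractional readings such as 139.5 mmHg are handled correctly.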

This double-blind, randomized, two parallel arms, placebo-controlled study tested the effects of individualized homeopathic medicines (IH) against placebo in preventing the progression of pre-hypertension to hypertension.

Ninety-two patients with pre-hypertension were randomized to receive either IH (n = 46) or identical-looking placebo (n = 46). Both IH and placebo were given alongside lifestyle modification (LSM) advice, including dietary approaches to stop hypertension (DASH) and brisk exercise.

The primary endpoints were systolic and diastolic blood pressures (SBP and DBP); secondary endpoints were Measure Yourself Medical Outcome Profile version 2.0 (MYMOP-2) scores. All endpoints were measured at baseline, and every month, up to 3 months.

After 3 months of intervention, the number of patients progressing from pre-hypertension to hypertension was similar in both groups, with no significant difference between them. Reductions in BP and MYMOP-2 scores were also not significantly different. Lycopodium clavatum, Thuja occidentalis and Natrum muriaticum were the most frequently prescribed medicines. No serious adverse events were reported from either group.

The authors concluded that there was a small, but non-significant direction of effect favoring homeopathy, which ultimately rendered the trial inconclusive.

We have come across this terminology before; homeopaths seem to like it. It prevents them from calling a negative trial by its proper name: A NEGATIVE TRIAL. In their view

- a positive trial is a study where homeopathy yields better results than placebo,

- a negative trial is a study where placebo yields better results than homeopathy,

- an inconclusive trial is a study where homeopathy yields results that are not significantly different from placebo.

Is this silly?

Yes, it is completely bonkers!

Is it dishonest?

Yes, in my view, it is.

Why is it done nonetheless?

Perhaps a glance at the affiliations of the authors provides an answer:

- 1Dept. of Organon of Medicine and Homoeopathic Philosophy, D. N. De Homoeopathic Medical College and Hospital, Kolkata, West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 2Dept. of Organon of Medicine and Homoeopathic Philosophy, D. N. De Homoeopathic Medical College and Hospital, Kolkata, West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 3Principal and Administrator D. N. De Homoeopathic Medical College and Hospital, Kolkata, West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 4Dept. of Practice of Medicine, D. N. De Homoeopathic Medical College and Hospital, Kolkata, West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 5Dept. of Practice of Medicine, Mahesh Bhattacharyya Homoeopathic Medical College and Hospital, Howrah, Govt. of West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 6Dept. of Organon of Medicine and Homoeopathic Philosophy, National Institute of Homoeopathy, Block GE, Sector III, Salt Lake, Kolkata 700106, West Bengal, India; affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

- 7Dept. of Organon of Medicine and Homoeopathic Philosophy, State National Homoeopathic Medical College and Hospital, Lucknow, Govt. of Uttar Pradesh, affiliated to Dr. Bhimrao Ramji Ambedkar University, Agra, Govt. of Uttar Pradesh, India.

- 8Dept. of Repertory, D. N. De Homoeopathic Medical College and Hospital, Kolkata, West Bengal, affiliated to The West Bengal University of Health Sciences, Govt. of West Bengal, India.

Despite these multiple conflicts of interest, the article carries this note:

“Declaration of Competing Interest: None declared.”

In their 2019 systematic review of spinal manipulative therapy (SMT) for chronic back pain, Rubinstein et al. included 7 studies comparing the effect of SMT with sham SMT.

They defined SMT as any hands-on treatment of the spine, including both mobilization and manipulation. Mobilizations use low-grade velocity, small or large amplitude passive movement techniques within the patient’s range of motion and control. Manipulation uses a high-velocity impulse or thrust applied to a synovial joint over a short amplitude near or at the end of the passive or physiological range of motion. Even though there is overlap, it seems fair to say that mobilization is the domain of osteopaths, while manipulation is that of chiropractors.

The researchers found:

- low-quality evidence suggesting that SMT does not result in a statistically better effect than sham SMT at one month,

- very low-quality evidence suggesting that SMT does not result in a statistically better effect than sham SMT at six and 12 months.

- low-quality evidence suggesting that, in terms of function, SMT results in a moderate to strong statistically significant and clinically better effect than sham SMT at one month. Exclusion of an extreme outlier accounted for a large percentage of the statistical heterogeneity for this outcome at this time interval (SMD −0.27, 95% confidence interval −0.52 to −0.02; participants=698; studies=7; I2=39%), resulting in a small, clinically better effect in favor of SMT.

- very low-quality evidence suggesting that, in terms of function, SMT does not result in a statistically significant better effect than sham SMT at six and 12 months.
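To see how small the pooled effect on function at one month is, one can back-calculate its standard error and p-value from the reported 95% confidence interval (SMD −0.27, −0.52 to −0.02). The Python sketch below assumes a symmetric, normal-based (Wald-type) interval, which is the usual construction for a pooled SMD but is not stated in the review summary; the helper name is hypothetical. It recovers a p-value of roughly 0.03, and an SMD of 0.27 is well below the conventional 0.5 benchmark for a moderate effect.

```python
import math


def se_and_p_from_ci(estimate: float, lower: float, upper: float,
                     z_crit: float = 1.96) -> tuple[float, float]:
    """Back-calculate standard error and two-sided p-value from a 95% CI.

    Assumes the CI was computed as estimate +/- z_crit * SE on a normal scale.
    """
    se = (upper - lower) / (2 * z_crit)
    z = abs(estimate) / se
    p = math.erfc(z / math.sqrt(2))  # two-sided p-value under a normal distribution
    return se, p


se, p = se_and_p_from_ci(-0.27, -0.52, -0.02)
print(f"SE = {se:.3f}, p = {p:.3f}")
```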

This means that the effects of SMT are very similar to those of placebo (the uncertain effects on function could be interpreted as the result of residual de-blinding due to the lack of an optimal placebo or sham intervention). In turn, this means that the effects patients experience are largely or completely due to a placebo response and that SMT has no, or only a negligibly small, specific effect on back pain. Considering that SMT is by no means risk-free and that less risky treatments exist, the inescapable conclusion is that SMT cannot be recommended as a treatment for chronic back pain.

This multicenter, randomized, sham-controlled trial was aimed at assessing the long-term efficacy of acupuncture for chronic prostatitis/chronic pelvic pain syndrome (CP/CPPS). Men with moderate to severe CP/CPPS were recruited, regardless of prior exposure to acupuncture. They received 20 sessions of acupuncture or sham acupuncture over 8 weeks, followed by a 24-week follow-up after treatment. Real acupuncture was designed to elicit the typical de qi sensation, whereas the sham acupuncture (the authors state they used the Streitberger needle, but their drawing looks more as though they used our device) did not generate this feeling.

The primary outcome was the proportion of responders, defined as participants who achieved a clinically important reduction of at least 6 points from baseline on the National Institutes of Health Chronic Prostatitis Symptom Index at weeks 8 and 32. Ascertainment of sustained efficacy required the between-group difference to be statistically significant at both time points.
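The responder definition and the rule for "sustained efficacy" can be expressed in a few lines of Python. This only illustrates the decision logic as described; it is not the trialists' analysis (which produced adjusted estimates), and the 0.05 significance threshold, all names and all scores are hypothetical.

```python
from dataclasses import dataclass

# From the trial's definition: a responder improves by at least 6 points
# from baseline on the NIH Chronic Prostatitis Symptom Index (NIH-CPSI).
MIN_REDUCTION = 6

@dataclass
class Participant:
    """Hypothetical NIH-CPSI total scores for one participant."""
    baseline: int
    week8: int
    week32: int

def is_responder(baseline: int, followup: int, min_reduction: int = MIN_REDUCTION) -> bool:
    """True if the score fell by at least `min_reduction` points (lower = fewer symptoms)."""
    return baseline - followup >= min_reduction

def responder_rate(group: list[Participant], week: str) -> float:
    """Proportion of responders in a group at 'week8' or 'week32'."""
    hits = sum(is_responder(p.baseline, getattr(p, week)) for p in group)
    return hits / len(group)

def sustained_efficacy(p_week8: float, p_week32: float, alpha: float = 0.05) -> bool:
    """Sustained efficacy requires a significant between-group difference at BOTH time points."""
    return p_week8 < alpha and p_week32 < alpha

# Hypothetical scores, purely to exercise the functions.
group = [Participant(25, 18, 20), Participant(22, 20, 15), Participant(30, 24, 23)]
print(responder_rate(group, "week8"), responder_rate(group, "week32"))
print(sustained_efficacy(0.001, 0.001), sustained_efficacy(0.001, 0.20))
```

Requiring significance at both time points is stricter than testing either one alone.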

A total of 440 men (220 in each group) were recruited. At week 8, the proportions of responders were:

- 60.6% (95% CI, 53.7% to 67.1%) in the acupuncture group

- 36.8% (CI, 30.4% to 43.7%) in the sham acupuncture group (adjusted difference, 21.6 percentage points [CI, 12.8 to 30.4 percentage points]; adjusted odds ratio, 2.6 [CI, 1.8 to 4.0]; P < 0.001).

At week 32, the proportions were:

- 61.5% (CI, 54.5% to 68.1%) in the acupuncture group

- 38.3% (CI, 31.7% to 45.4%) in the sham acupuncture group (adjusted difference, 21.1 percentage points [CI, 12.2 to 30.1 percentage points]; adjusted odds ratio, 2.6 [CI, 1.7 to 3.9]; P < 0.001).
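As a rough sanity check, the unadjusted (crude) between-group difference and odds ratio can be recomputed from the reported proportions alone. The published figures are adjusted, using covariates not listed here, so the crude numbers are expected to differ somewhat; this sketch only shows the arithmetic, and the function names are my own.

```python
def odds(p: float) -> float:
    """Convert a proportion into odds."""
    return p / (1 - p)

def crude_effects(p_treat: float, p_control: float) -> tuple[float, float]:
    """Unadjusted risk difference (percentage points) and odds ratio."""
    rd = (p_treat - p_control) * 100
    odds_ratio = odds(p_treat) / odds(p_control)
    return rd, odds_ratio

# Reported proportions of responders: (acupuncture, sham acupuncture).
reported = {
    "week 8": (0.606, 0.368),
    "week 32": (0.615, 0.383),
}

for week, (acu, sham) in reported.items():
    rd, odds_ratio = crude_effects(acu, sham)
    print(f"{week}: crude difference {rd:.1f} pp, crude OR {odds_ratio:.2f}")
```

With these inputs, the crude odds ratios come out at about 2.6 at both time points, in line with the adjusted values, while the crude differences (roughly 23 to 24 percentage points) are a little larger than the adjusted ones.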

Twenty (9.1%) and 14 (6.4%) adverse events were reported in the acupuncture and sham acupuncture groups, respectively. No serious adverse events were reported. No significant difference was found in changes in the International Index of Erectile Function 5 score at any assessment time point, or in peak and average urinary flow rates at week 8.

The authors concluded that, compared with sham therapy, 20 sessions of acupuncture over 8 weeks resulted in greater improvement in symptoms of moderate to severe CP/CPPS, with durable effects 24 weeks after treatment.

The study was sponsored by the China Academy of Chinese Medical Sciences and the National Administration of Traditional Chinese Medicine. The trialists are affiliated with the following institutions:

- Guang’anmen Hospital, China Academy of Chinese Medical Sciences, Beijing, China (Y.S., B.L., Z.Q., J.Z., J.W., X.L., W.W., R.P., H.C., X.W., Z.L.).

- Key Laboratory of Chinese Internal Medicine of Ministry of Education, Dongzhimen Hospital, Beijing University of Chinese Medicine, Beijing, China (Y.L.).

- ThedaCare Regional Medical Center – Appleton, Appleton, Wisconsin (K.Z.).

- Hengyang Hospital Affiliated to Hunan University of Chinese Medicine, Hengyang, China (Z.Y.).

- The First Hospital of Hunan University of Chinese Medicine, Changsha, China (W.Z.).

- Guangdong Provincial Hospital of Traditional Chinese Medicine, Guangzhou, China (W.F.).

- The First Affiliated Hospital of Anhui University of Chinese Medicine, Hefei, China (J.Y.).

- West China Hospital of Sichuan University, Chengdu, China (N.L.).

- China Academy of Chinese Medical Sciences, Beijing, China (L.H.).

- Yantai Hospital of Traditional Chinese Medicine, Yantai, China (Z.Z.).

- Shaanxi Provincial Hospital of Traditional Chinese Medicine, Xi’an, China (T.S.).

- The Third Affiliated Hospital of Zhejiang Chinese Medical University, Hangzhou, China (J.F.).

- Beijing Fengtai Hospital of Integrated Traditional and Western Medicine, Beijing, China (Y.D.).

- Xi’an TCM Brain Disease Hospital, Xi’an, China (H.S.).

- Dongfang Hospital Beijing University of Chinese Medicine, Beijing, China (H.H.).

- Luohu District Hospital of Traditional Chinese Medicine, Shenzhen, China (H.Z.).

- Guizhou University of Traditional Chinese Medicine, Guiyang, China (Q.M.).

These facts, together with the previously discussed notion that clinical trials from China are notoriously unreliable, do not inspire confidence. Moreover, one might well wonder about the authors’ claim that patients were blinded. As pointed out above, the real and sham acupuncture were fundamentally different: the former did generate de qi, while the latter did not! A slightly pedantic point: if I am not mistaken, the trial tested not the efficacy but the effectiveness of acupuncture. Finally, one might ask what the rationale for acupuncture as a treatment of CP/CPPS could be. As far as I can see, there is no plausible mechanism (other than placebo) to explain the effects.

So, is the evidence that emerged from the new study convincing?

No, in my view, it is not!

In fact, I am surprised that a journal as reputable as the Annals of Internal Medicine published it.